Truepic CEO Jeffrey McGregor said the incident was just the tip of the “iceberg.” There will be more AI-generated content on social media, and we’re not ready for it, McGregor said.

According to CNN, Truepic wants to solve this problem with Truepic Lens, technology that the company says authenticates media at the time of creation. The data-collecting app shows users the date, time, location, and device used to create an image, and applies a digital signature to verify whether the image is natural or AI-generated.

Fake photo of Pentagon explosion goes viral on Twitter

Truepic, a Microsoft-backed company founded in 2015, said it is seeing interest from organizations ranging from NGOs to media companies, and even from insurers looking to confirm that claims are legitimate.

McGregor said that once everything can be faked, and artificial intelligence has reached its peak in quality and accessibility, people will no longer know what is real online.

Tech companies like Truepic have been working to combat online misinformation for years. But the rise of a new breed of AI tools that can generate images and text from user commands has added urgency. Earlier this year, fake images of Pope Francis in a Balenciaga down jacket and former US President Donald Trump being arrested were widely shared. Both incidents have left millions of people alarmed by the potential dangers of AI.

Some lawmakers are now calling on tech companies to address the issue by labeling AI-generated content. European Commission Vice President Vera Jourova said companies including Google, Meta, Microsoft and TikTok have joined the European Union's voluntary code of practice on combating disinformation.

A growing number of startups and tech giants, including some that are building generative AI into their own products, are trying to establish standards and solutions that help people determine whether an image or video was created with AI.

But as AI technology advances faster than humans can keep up with, it's unclear whether these solutions can fully solve the problem. Even OpenAI, the company behind DALL-E and ChatGPT, has admitted that its own efforts to help detect AI-generated writing are imperfect.

Companies developing solutions are taking two approaches to solving the problem. The first relies on developing programs to identify AI-generated images after they have been produced and shared online. The other focuses on marking an image as real or AI-generated with a type of digital signature.

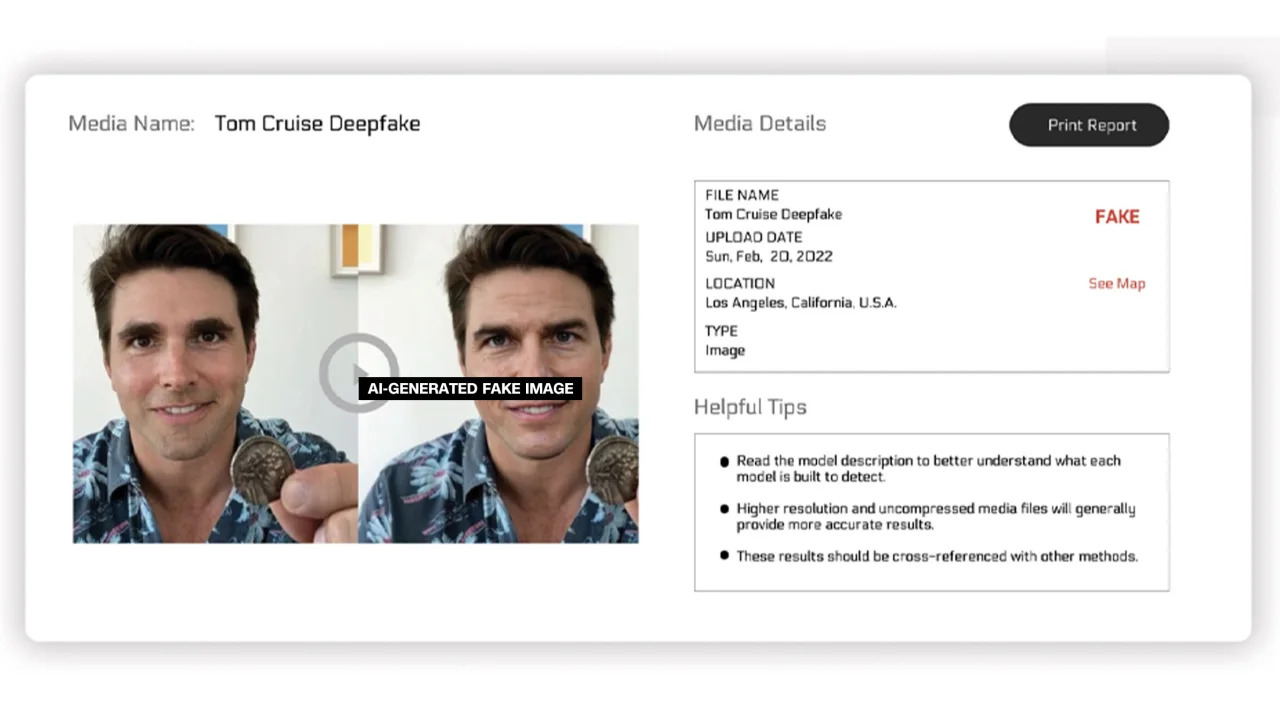

Reality Defender and Hive Moderation are working on the first approach. On their platforms, users can upload images to be scanned and then receive an analysis with a percentage indicating how likely the photo is to be real or AI-generated.

Reality Defender says it uses proprietary deepfake and generative content fingerprinting technology to detect AI-generated video, audio, and images. In an example provided by the company, Reality Defender showed a deepfake image of Tom Cruise that was rated 53% “suspicious” because the person in the image had the kind of facial distortion that is common in manipulated photos.

AI-generated labeled images

These services offer free and paid versions. Hive Moderation says it charges $1.50 per 1,000 images. Reality Defender says its pricing can vary based on a variety of factors, including whether clients require the company's expertise and support. Reality Defender CEO Ben Colman says the risk is doubling every month because anyone can create fake photos using AI tools.

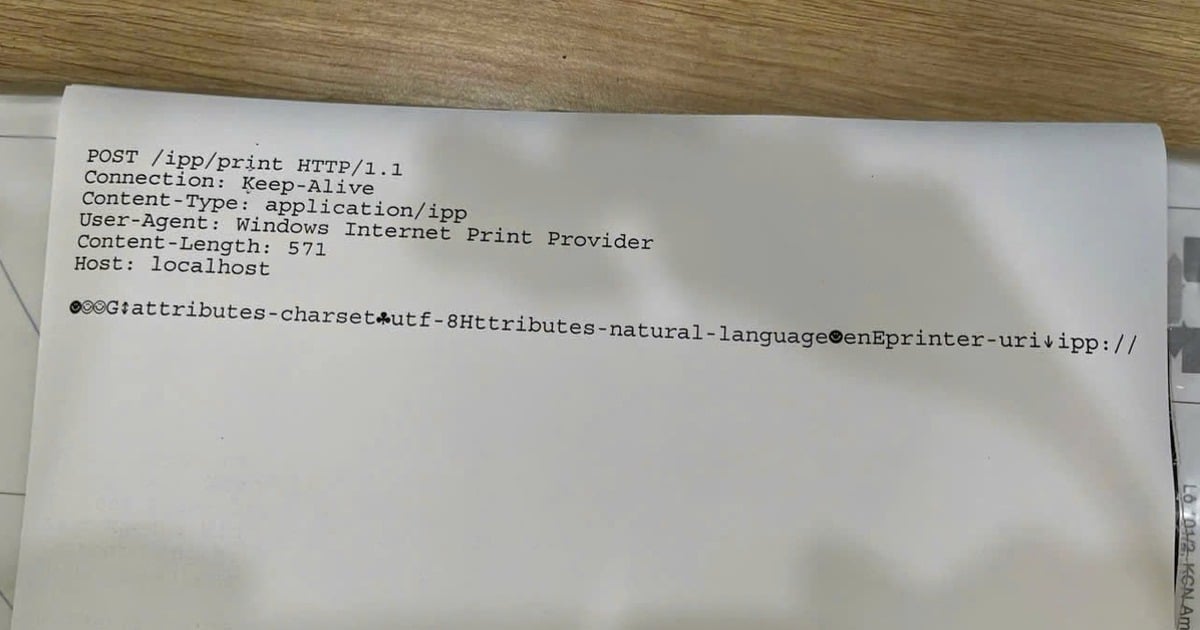

Several other companies are working on adding a kind of label to images to certify whether they are real or AI-generated. So far, the effort has been largely driven by the Coalition for Content Provenance and Authenticity (C2PA).

C2PA was founded in 2021 to create a technical standard for certifying the origin and history of digital media. It combines the efforts of Adobe's Content Authenticity Initiative (CAI) and Project Origin, an effort led by Microsoft and the BBC that focuses on combating misinformation in digital news. Other companies involved in C2PA include Truepic, Intel and Sony.

Based on the principles of C2PA, CAI will release open-source tools for companies to create content credentials, or metadata that contain information about images. According to the CAI website, this will allow creators to transparently share details about how they created an image. This way, end users can access the context around who altered an image, what was changed, and how, and then judge for themselves how authentic the image is.
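To illustrate the general idea of attaching verifiable metadata to an image, here is a minimal Python sketch. It is not the C2PA format or any CAI tool: the function names, field names, and signing key are all hypothetical, and a shared-secret HMAC stands in for the public-key signatures that real provenance systems generally rely on. It shows only how a record of an image's details can be bound to the image so that later changes are detectable.

```python
import hashlib
import hmac
import json

# Hypothetical signing key for illustration only. Real provenance systems
# generally use public-key signatures; a shared secret keeps this sketch
# within the standard library.
SIGNING_KEY = b"demo-key-not-for-production"


def make_credential(image_bytes: bytes, details: dict) -> dict:
    """Bind descriptive details to an image by hashing and signing them."""
    claim = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "details": details,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_credential(image_bytes: bytes, credential: dict) -> bool:
    """Return True only if the image and its recorded details are unaltered."""
    claim = credential["claim"]
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, credential["signature"])
    image_ok = claim["image_sha256"] == hashlib.sha256(image_bytes).hexdigest()
    return signature_ok and image_ok


# Made-up image bytes and details, used only to exercise the functions.
original = b"example image bytes"
credential = make_credential(
    original, {"tool": "example-camera-app", "ai_generated": False}
)

print(verify_credential(original, credential))              # unmodified image
print(verify_credential(original + b"edited", credential))  # altered image
```

The first check passes and the second fails, because the stored hash no longer matches the edited bytes. Changing the recorded details without re-signing would fail in the same way.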

Many companies have already integrated the C2PA standard and CAI tools into their applications. Adobe’s Firefly, a new AI imaging tool added to Photoshop, complies with the C2PA standard through its Content Credentials feature. Microsoft also announced that images and videos created with Bing Image Creator and Microsoft Designer will carry cryptographic signatures in the coming months.

In May, Google announced an “About This Image” feature that lets users see when an image first appeared on Google and where it can be seen. The search giant also announced that every Google AI-generated image will carry a markup in the original file to “add context” if the image is found on another website or platform.

While tech companies are trying to address concerns about AI-generated imagery and the integrity of digital media, experts in the field stress that businesses will need to work together and with governments to address the issue. Yet tech companies are racing to develop AI despite the risks.
