The Department of Information Security recently issued a warning about the continuing recurrence of high-tech fraud using fake videos and images.

According to the department, authorities have repeatedly warned the public that cybercriminals take publicly available images and videos of people, cut and edit them, and then use the resulting fake videos to threaten and blackmail victims.

Using Deepfake technology, which can reproduce a person's voice and image with high accuracy, criminals can impersonate leaders in online meetings or create fake videos and calls to commit financial fraud.

Furthermore, these scams often exploit psychological factors such as urgency, fear, or authority, causing victims to act hastily without carefully verifying authenticity.

Deepfakes are not limited to financial investment scams. Another example is romance scams, in which Deepfakes are used to create fictional characters that interact with victims over video calls; after gaining the victim’s trust, the scammer asks for money transfers to cover emergencies, travel expenses, or loans.

In light of this situation, the Department of Information Security recommends that people be wary of investment advice from celebrities on social networks; be cautious about messages, emails, or calls from unidentified senders; and watch closely for unnatural facial expressions in videos.

People should also limit how much personal information they post on social networks so that criminals cannot steal their images, videos, or voices, and should set their accounts to private mode to protect personal information.

Speaking to reporters from Dai Doan Ket Newspaper, cybersecurity expert Ngo Minh Hieu, co-founder of the Vietnam Cyber Fraud Prevention Project (Chongluadao.vn), said that the use of AI-based Deepfake technology to fake video calls for fraud remains a complicated problem. Scammers exploit this technology to win the trust of their "prey".

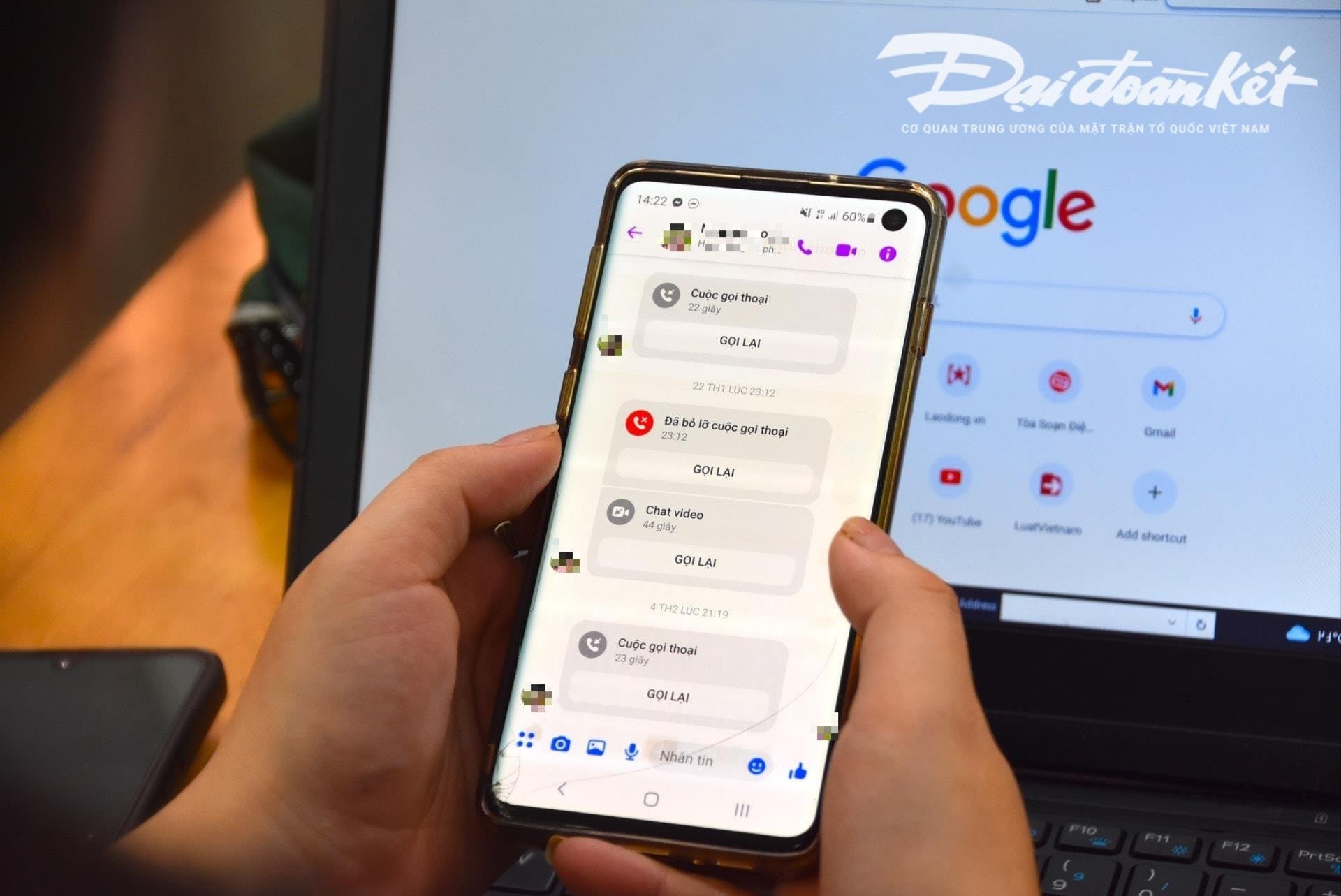

Specifically, the scammers proactively video-call victims using previously "stolen" images and videos that they have technically processed, distorting the sound or faking image problems to gain the victims' trust.

These calls are usually very short, lasting only a few seconds. The scammer then cites an unstable connection, being out on the street, or a similar excuse, and asks the victim to carry out their requests.

Experts warn that the use of AI for cyber fraud will likely increase significantly in the near future. Therefore, people must proactively raise their awareness, especially when receiving strange messages, video calls, and links.

According to Hieu's analysis, current Deepfake artificial intelligence (AI) algorithms cannot cope during a real-time call if the caller turns left, turns right, or stands up.

One particular weakness to watch for during these calls is the teeth: according to the expert, current AI algorithms cannot reproduce the teeth of the person being impersonated.

In a Deepfake call, the person may appear to have no teeth when they open their mouth, or may appear to have three or even four jaws. Teeth are therefore the most recognizable giveaway of a fake call made with Deepfake.

“Slowing down” and not complying with a request immediately is a prerequisite for avoiding the scam trap. When receiving any message or call via social networks, people should phone their relatives directly, staying on the call for more than 30 seconds, or meet them in person to verify exactly who is contacting them.

Anyone who suspects that someone is impersonating a relative on social networks to commit fraud or appropriate property should immediately report it to the nearest police agency for timely support and handling.

Source: https://daidoanket.vn/chuyen-gia-chi-meo-nhan-biet-cuoc-goi-deepfake-lua-dao-10300910.html
