On March 12, Google introduced Gemma 3, a third-generation open-source AI model that can run on both smartphones and high-performance workstations.

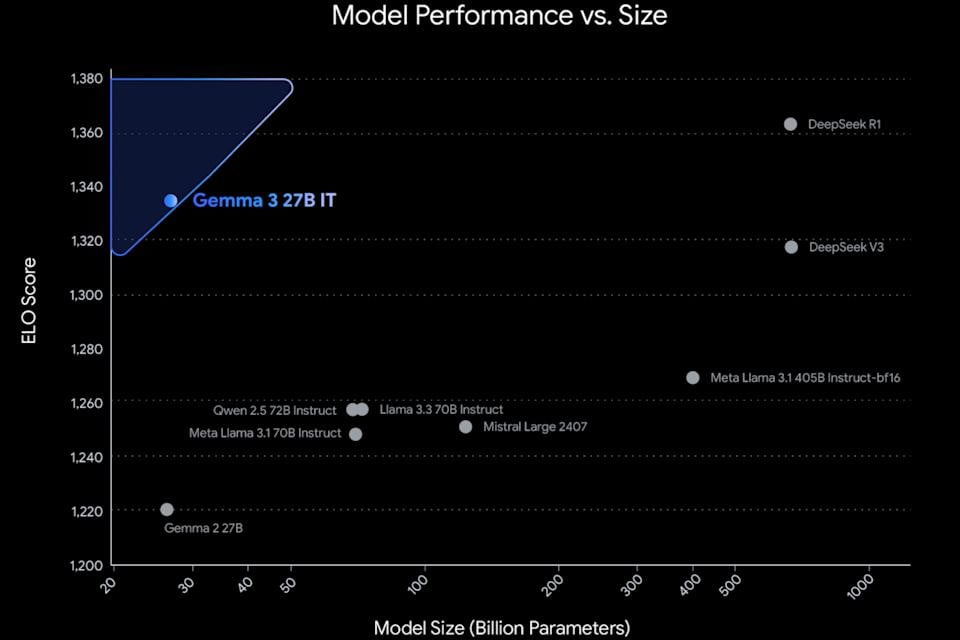

Gemma 3 is available in four variants with 1 billion, 4 billion, 12 billion, and 27 billion parameters. Google describes it as the world's best single-accelerator model, meaning it can run on a single GPU or TPU instead of requiring a large cluster of computers.

In theory, this allows Gemma 3 to run directly on the Pixel phone's Tensor Processing Unit (TPU), similar to how the Gemini Nano model runs locally on a mobile device.
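A back-of-the-envelope calculation helps show why these model sizes can fit on a single accelerator. The sketch below assumes that weight memory is simply the parameter count times the bytes per parameter. It uses the nominal sizes quoted above (exact parameter counts may differ) and ignores activations, the KV cache, and runtime overhead. The precision options and function names are illustrative assumptions, not figures published by Google.

```python
# Rough weight-memory estimate for each Gemma 3 size.
# Assumption: memory ~= parameter count * bytes per parameter.
# Activations, KV cache, and runtime overhead are NOT included.

# Nominal parameter counts from the article (exact counts may differ).
PARAM_COUNTS = {
    "1B": 1_000_000_000,
    "4B": 4_000_000_000,
    "12B": 12_000_000_000,
    "27B": 27_000_000_000,
}

# Illustrative storage precisions (assumed, not from the article).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: int, precision: str) -> float:
    """Return approximate weight memory in gigabytes (10^9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def footprint_table() -> dict:
    """Map each model size to its estimated memory per precision."""
    return {
        name: {p: weight_memory_gb(n, p) for p in BYTES_PER_PARAM}
        for name, n in PARAM_COUNTS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        cells = ", ".join(f"{p}: {gb:.1f} GB" for p, gb in row.items())
        print(f"Gemma 3 {name}: {cells}")
```

Under these assumptions, the smallest variant needs only a couple of gigabytes for its weights, which is within reach of a phone, while the largest stays within the memory of a single high-end accelerator once overhead is budgeted separately.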

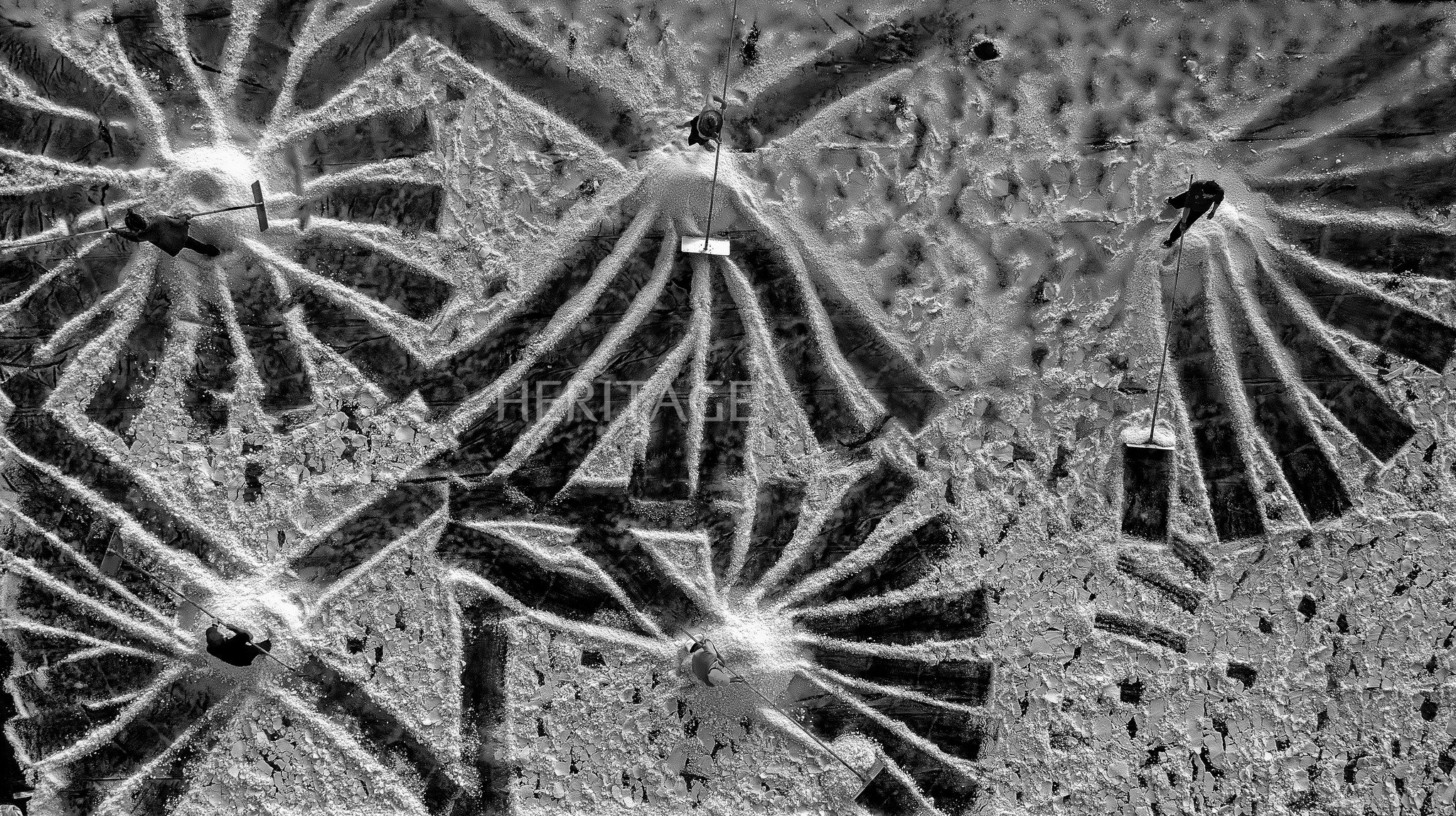

Compared to the Gemini AI model family, Gemma 3's biggest advantage is its open-source nature, which makes it easy for developers to customize, package, and deploy on demand in mobile apps and desktop software. Additionally, Gemma 3 supports more than 140 languages, with out-of-the-box support for more than 35 of them.

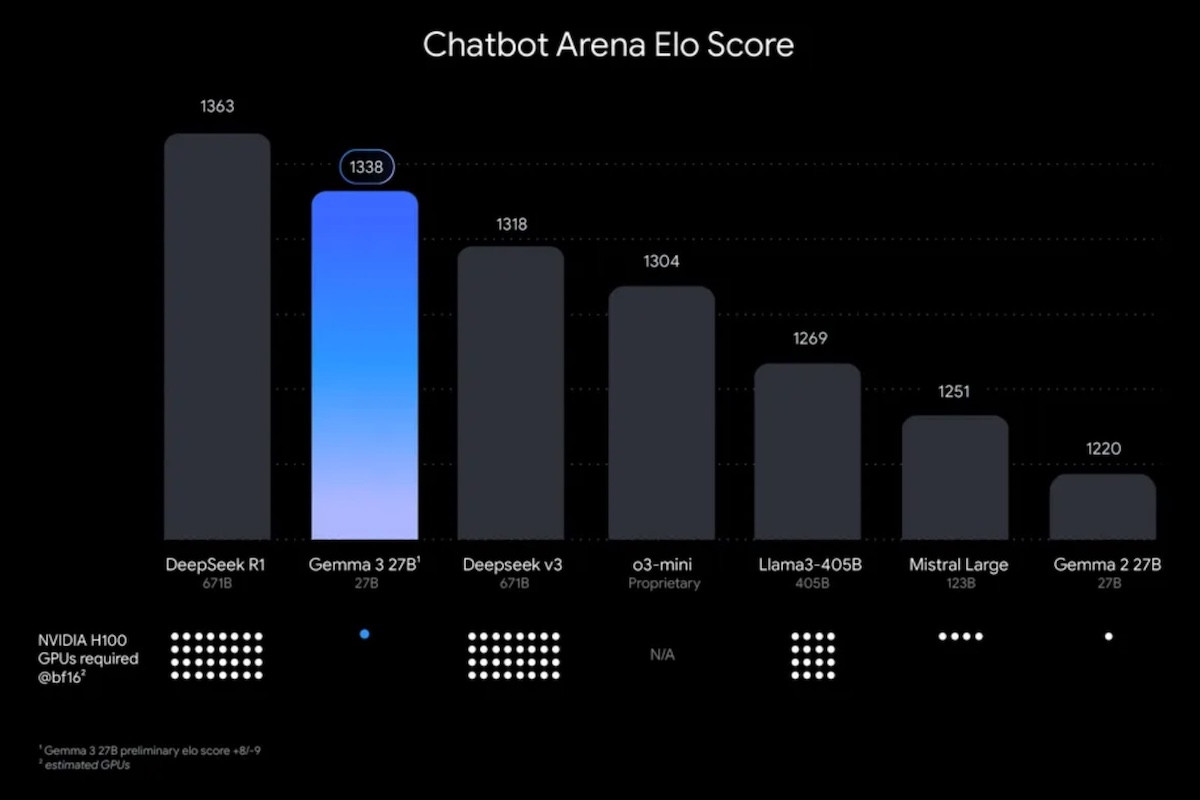

Similar to the latest Gemini 2.0 models, Gemma 3 can process text, images, and short videos. In terms of performance, Google says Gemma 3 is rated higher than many other popular AI models, including DeepSeek V3, OpenAI's o3-mini, and Meta's Llama 3 405B.

Context equivalent to a 200-page book

Gemma 3 supports a context window of up to 128,000 tokens, roughly the amount of text in a 200-page book. By comparison, the Gemini 2.0 Flash-Lite model has a context window of up to 1 million tokens.
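The comparison can be worked through with simple arithmetic. The sketch below takes the article's own figures (128,000 tokens for a 200-page book) to derive an implied tokens-per-page rate, then applies that rate to a 1-million-token window. The per-page rate is an implied average rather than a measured value, and the function names are hypothetical.

```python
# Worked arithmetic for the context-window comparison.
# Assumption: the article's "128,000 tokens ~= 200 pages" equivalence holds,
# so the tokens-per-page rate is an implied average, not a measurement.

GEMMA3_CONTEXT_TOKENS = 128_000
BOOK_PAGES = 200
LARGER_CONTEXT_TOKENS = 1_000_000  # the 1-million-token window cited above


def tokens_per_page(context_tokens: int, pages: int) -> float:
    """Implied average number of tokens on one page."""
    return context_tokens / pages


def pages_for_context(context_tokens: int, per_page: float) -> float:
    """Number of pages that fit in a context window at a given rate."""
    return context_tokens / per_page


if __name__ == "__main__":
    rate = tokens_per_page(GEMMA3_CONTEXT_TOKENS, BOOK_PAGES)
    pages = pages_for_context(LARGER_CONTEXT_TOKENS, rate)
    print(f"Implied rate: {rate:.0f} tokens per page")          # 640
    print(f"1M-token window: about {pages:.1f} pages of text")  # 1562.5
```

At the implied rate of 640 tokens per page, a 1-million-token window corresponds to roughly 1,560 pages, or nearly eight such books.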

Gemma 3 can interact with external datasets and act as an automated agent, similar to how Gemini seamlessly supports work across platforms like Gmail or Docs.

Google’s latest open-source AI models can be deployed locally or through the company’s cloud services, such as Vertex AI. Gemma 3 is now available on Google AI Studio, as well as third-party platforms like Hugging Face, Ollama, and Kaggle.

Google's third-generation open-source model is part of an industry trend in which companies develop large language models (LLMs) and small language models (SLMs) in parallel. Google's rival Microsoft is pursuing a similar strategy with its Phi line of open-source small language models.

Small language models like Gemma and Phi are highly regarded for their resource efficiency, making them ideal for running on devices like smartphones. Additionally, their lower latency makes them particularly suitable for mobile applications.

Source: https://vietnamnet.vn/google-ra-mat-gemma-3-mo-hinh-ai-ma-nguon-mo-voi-hieu-suat-vuot-troi-2380097.html
