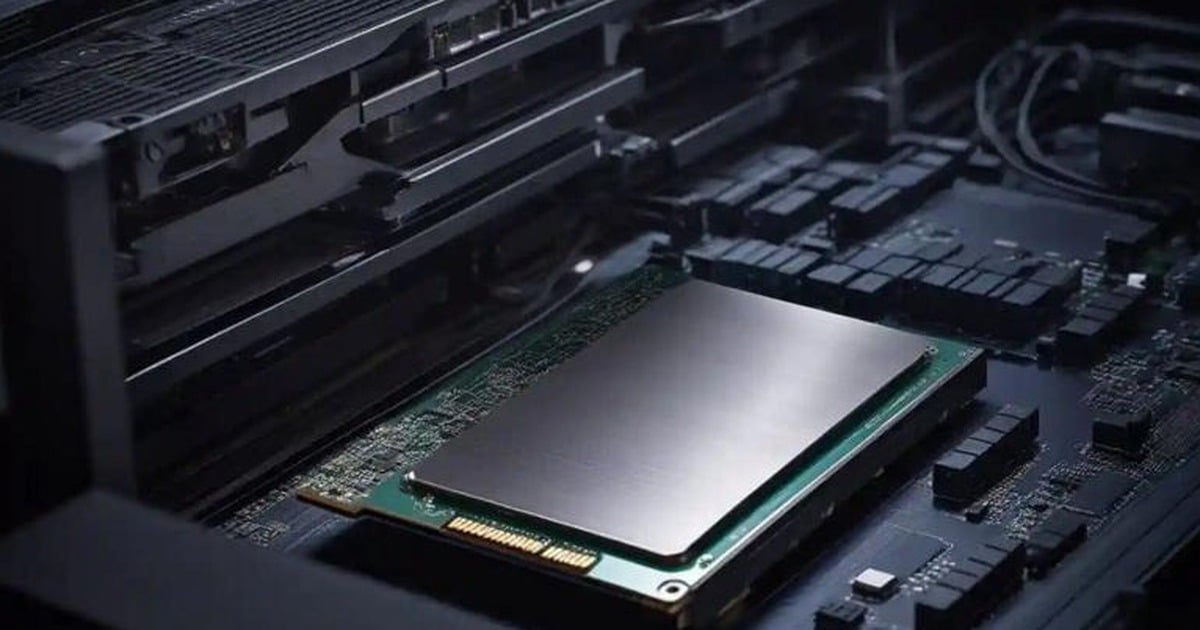

The company that owns ChatGPT and Dall-E has made several commitments on AI policy, including this one: “The company is committed to working with the US government and policymakers around the world to support the development of licensing requirements for all high-performance AI models in the future.”

The idea of an AI licensing process, promoted jointly by industry leaders like OpenAI and the government, is setting the stage for a potential conflict with startups and open-source developers, who see it as an attempt to make it harder to break into the AI space.

At a hearing before the US Congress, OpenAI CEO Sam Altman previously raised the idea of establishing an agency that would license AI products and revoke those licenses if the products violate established rules.

OpenAI's disclosure comes as other major AI companies like Microsoft and Google are also considering similar moves.

However, the company’s vice president of global affairs, Anna Makanju, said the internal policy document would differ from the policy the White House is expected to release. OpenAI said it does not “push” for licensing but believes it is a “practical” way for governments to regulate emerging systems.

“It is important for governments to know whether these super-powerful systems could potentially cause harm,” and “there are very few ways for authorities to know about these systems without self-reporting.”

Makanju said OpenAI only supports licensing AI models more powerful than GPT-4 and wants to ensure smaller startups don't have to bear the regulatory burden.

Accountability and Transparency

OpenAI has also signaled in the internal document that it is willing to be more open about the data it uses to train image generators like Dall-E. The company says it is committed to a transparent, accountable approach to the data used in AI development.

Analysts noted that the commitments outlined in OpenAI's memo are highly consistent with some of the policy proposals Microsoft made in May. OpenAI noted that, despite receiving a $10 billion investment from Microsoft, it remains an independent company.

The company that owns ChatGPT has also conducted a survey on watermarks as a way to track the authenticity and copyright of AI-generated images.

In the memo, OpenAI appears to acknowledge the potential risks that AI systems pose for the job market and for inequality. The company said in the draft that it would conduct research and make recommendations to policymakers to protect the economy from potential “disruption.”

The company also said it is open to letting people access its systems and test them for vulnerabilities in areas including offensive content, manipulation, and misinformation. It also supports the creation of a collaborative cybersecurity information-sharing hub.

(According to Bloomberg)
