How do fake AI images work?

AI is everywhere these days – even in war. Artificial intelligence applications have improved so much this year that almost anyone can use AI generators to create images that look realistic, at least at first glance.

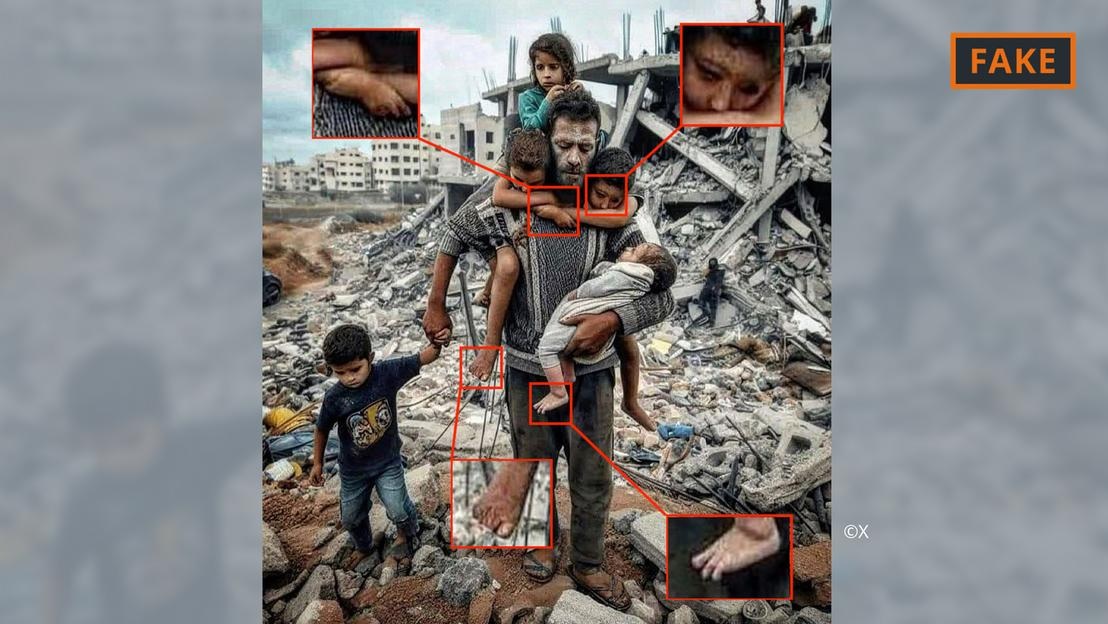

An AI-generated fake photo of the war in Gaza.

Users simply give tools like Midjourney or DALL-E a few prompts containing specifications and other details. The AI tools then convert the text prompts, or even voice prompts, into images.

This image generation process relies on what is known as machine learning. For example, if a creator asks for an image of a 70-year-old man riding a bicycle, the tool draws on the data it was trained on to match the terms in the prompt to images.

Based on the available information, the AI algorithm will generate images of the elderly cyclist. With more and more input and technical updates, these tools have improved significantly and are constantly learning.
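The term-matching idea described above can be illustrated with a toy sketch. This is only an analogy, not how Midjourney or DALL-E are actually implemented: real generators learn statistical associations from very large sets of captioned images and then synthesize new pixels, whereas this example merely ranks a handful of captions by how many prompt terms they share. The image IDs, captions, stopword list, and function names are all hypothetical.

```python
from collections import Counter

# Hypothetical toy "training set": made-up image IDs paired with captions.
CAPTIONED_IMAGES = {
    "img_001": "an elderly man riding a bicycle in a park",
    "img_002": "a young woman riding a horse",
    "img_003": "a 70-year-old man reading a newspaper",
    "img_004": "a red bicycle leaning against a wall",
}

# Small, assumed list of filler words that carry no visual meaning.
STOPWORDS = {"a", "an", "the", "in", "on", "of"}


def tokenize(text):
    """Lowercase the text and drop filler words."""
    return [word for word in text.lower().split() if word not in STOPWORDS]


def rank_by_term_overlap(prompt, captions):
    """Rank captioned images by how many prompt terms each caption shares."""
    prompt_terms = Counter(tokenize(prompt))
    scores = {}
    for image_id, caption in captions.items():
        caption_terms = Counter(tokenize(caption))
        # Counter intersection keeps only the terms present in both.
        scores[image_id] = sum((prompt_terms & caption_terms).values())
    # Highest score first; ties are broken by image ID for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


ranking = rank_by_term_overlap(
    "a 70-year-old man riding a bicycle", CAPTIONED_IMAGES
)
for image_id, score in ranking:
    print(image_id, score)
```

In this toy data, the caption about an elderly man on a bicycle shares the most terms with the prompt, so it ranks first. A real generator goes much further and combines what it has learned about "man", "70-year-old", and "bicycle" into an entirely new image.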

All of this is being applied to images related to the Middle East conflict. In a conflict where “emotions are so high,” misinformation, including that spread through AI images, has a huge impact, says AI expert Hany Farid.

Farid, a professor of digital forensics at the University of California at Berkeley, said fierce battles are the perfect breeding ground for creating and spreading fake content, and for stoking emotions.

AI images of the Israel-Hamas war

Images and videos created with the help of artificial intelligence have fueled disinformation related to the war in Ukraine, and that continues to happen in the Israel-Hamas war.

According to experts, AI images of war circulating on social media often fall into two categories. One focuses on human suffering and evokes empathy. The other consists of AI fakes that exaggerate events, thereby inciting conflict and escalating violence.

AI-generated fake photo of a father and his children in the rubble in Gaza.

For example, the first category includes the photo above of a father and his five children in front of a pile of rubble. It was shared multiple times on X (formerly Twitter) and Instagram, and viewed hundreds of thousands of times.

This image has been flagged by the community, at least on X, as a fake. It can be recognized by various errors and inconsistencies common to AI images (see image above).

Similar anomalies can also be seen in the fake AI image below, which went viral on X and purports to show a Palestinian family eating together amid the ruins.

AI-generated fake photo of a Palestinian family eating amid the ruins.

Meanwhile, another image showing troops waving Israeli flags as they march through a settlement filled with bombed-out homes falls into the second category, designed to stir up hatred and violence.

Where do such AI images come from?

Most AI-generated images of conflict are posted on social media platforms, but they are also available through a number of other platforms and organizations, and even on some news sites.

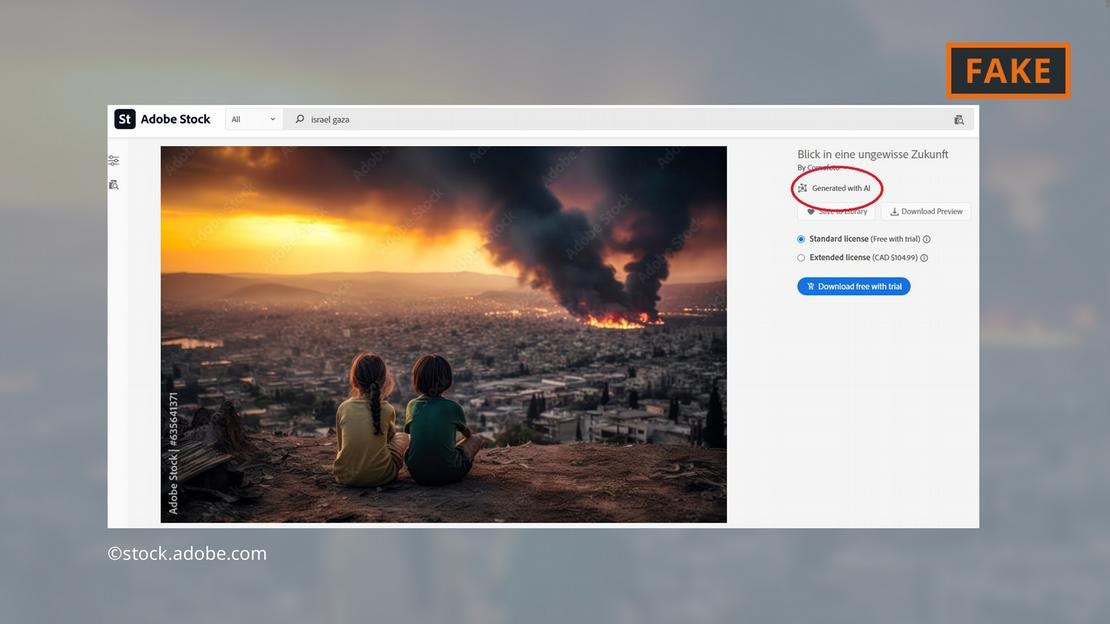

Software company Adobe caused a stir when it added AI-generated images to its stock photo range at the end of 2022. They are labeled accordingly in the database.

Adobe now also offers AI images of the Middle East war for sale, such as explosions, protesters, or plumes of smoke behind the Al-Aqsa Mosque.

Adobe is offering AI-generated imagery of the fighting in Gaza.

Critics have found this troubling, as some sites have continued to use the images without labeling them as AI-generated. For example, one such image appeared on the “Newsbreak” site without any indication that it was generated using AI.

Even the European Parliamentary Research Service, the scientific arm of the European Parliament, illustrated an online document about the Middle East conflict with an AI image from the Adobe database, without labeling it as AI-generated.

The European Digital Media Observatory is urging journalists and media professionals to be extremely careful when using AI images, advising against their use, especially when covering real-life events such as the war in Gaza.

How dangerous are AI images?

The viral AI content and imagery is bound to make users feel uneasy about everything they encounter online. “If we get into this world where images, audio, and video can be manipulated, everything becomes suspect,” explains UC Berkeley researcher Farid. “So you lose trust in everything, including the truth.”

That's exactly what happened in the following case: An image of what was said to be the charred corpse of an Israeli baby was shared on social media by Israeli Prime Minister Benjamin Netanyahu and several other politicians.

An anti-Israel influencer, Jackson Hinkle, later claimed that the image was created using artificial intelligence. Hinkle's claim was viewed more than 20 million times on social media and led to heated debate online.

Ultimately, many organizations and verification tools declared that the image was real and Hinkle's claim was false. However, it is clear that no tool can help users easily regain their lost trust!

Hoang Hai (according to DW)

Source

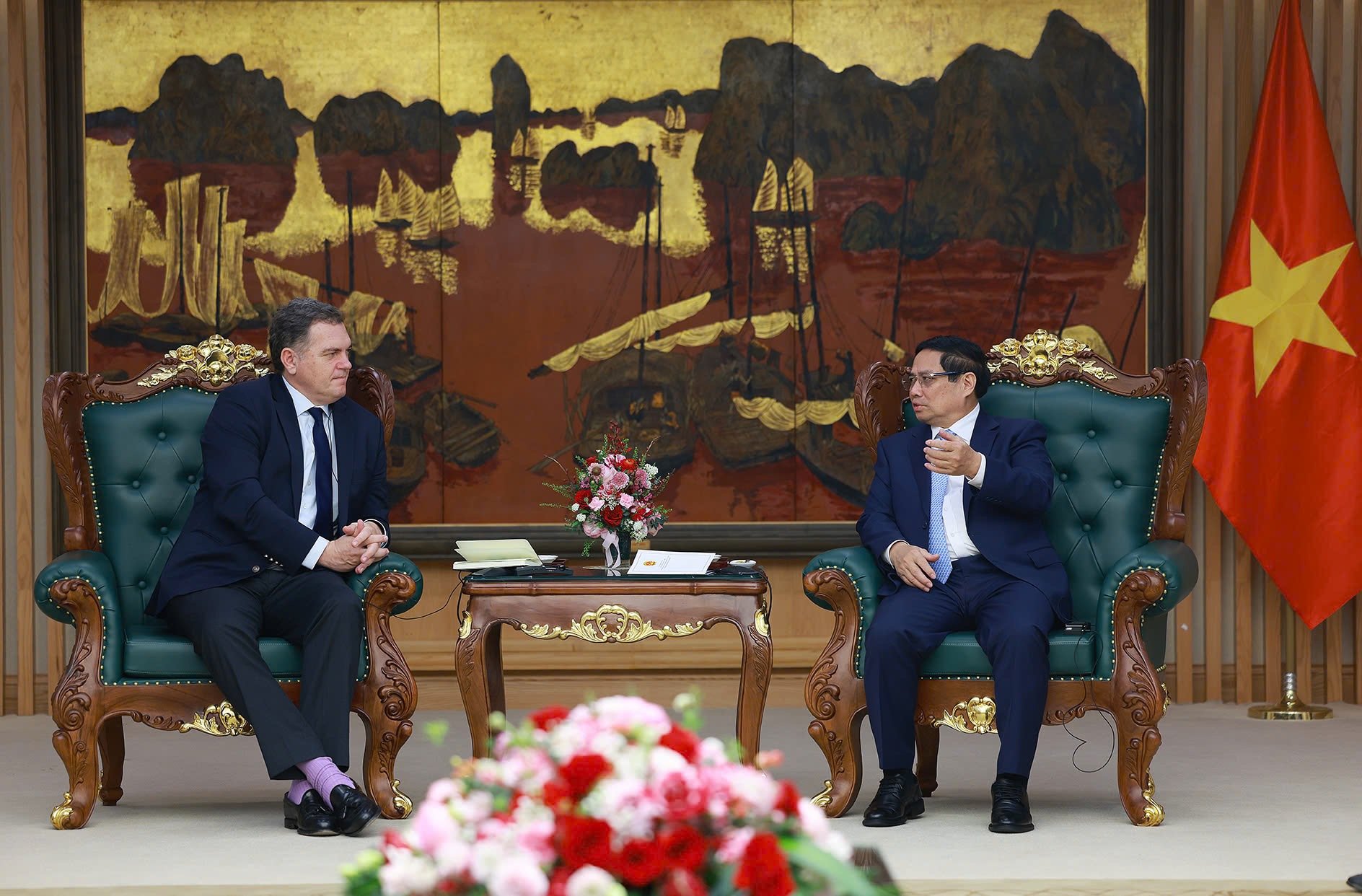
