OpenAI's launch of ChatGPT in late 2022 triggered a race to develop AI applications, especially generative AI, which has brought conveniences to many areas of life. It has also brought many risks.

Invasion of privacy

In recent years, many organizations and individuals have suffered losses when cybercriminals used AI to create video clips that fake the images and voices of real people, a technique known as Deepfake.

According to the Identity Fraud Report published by Sumsub at the end of November 2023, Deepfake scams increased tenfold worldwide between 2022 and 2023. This period coincides with the global boom in AI applications.

Status Labs notes that Deepfakes have had a major impact on culture, privacy, and personal reputation. Much of the news coverage and attention around Deepfakes has focused on celebrity pornography, revenge porn, disinformation, fake news, blackmail, and scams. For example, in 2019, a US energy company was defrauded of $243,000 by a hacker who impersonated the image and voice of a company executive and instructed employees to transfer money to partners.

Reuters reported that in 2023, about 500,000 Deepfake video and audio clips were shared on social networks worldwide. Some Deepfakes are made for fun, but others are created by malicious actors to defraud the public. Deepfake scams are estimated to have caused losses of up to 11 million USD worldwide in 2022.

Many technology experts have warned about the negative effects of AI, including threats to intellectual property rights and authenticity, and, further, ownership disputes over "works" created by AI. For example, one person may ask an AI application to draw a picture on a certain theme, and another person may make the same request, resulting in pictures with many similarities.

This is very likely to lead to disputes over ownership. To date, however, there is no global consensus on who, if anyone, holds the copyright in AI-generated content: the individual who commissioned the AI output or the company that developed the AI application.

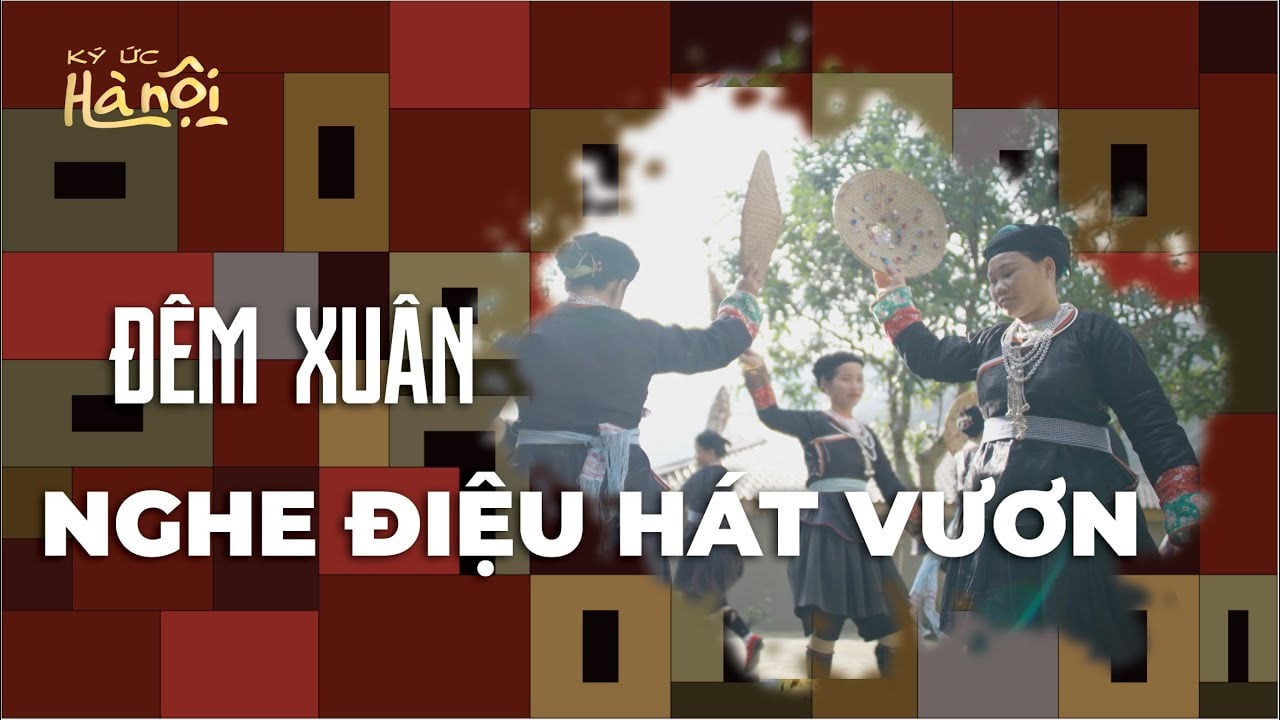

An image generated by an AI application

Difficult to distinguish between real and fake

So can AI-generated content infringe copyright? Technically, AI-generated content is synthesized by algorithms from the data on which they were trained. AI developers collect this data from many sources, mainly from material available on the internet. Many of these works are protected by copyright held by their owners.

On December 27, 2023, The New York Times (USA) sued OpenAI (the maker of ChatGPT) and Microsoft, claiming that millions of its articles had been used to train the two companies' AI chatbots and platforms. As evidence, the newspaper pointed to chatbot output, produced at users' request, that was identical or similar to the content of its articles. The newspaper said it could not stand by while its "intellectual property" was used by companies for profit.

The New York Times is the first major US newspaper to file a copyright lawsuit related to AI. Other newspapers may follow suit, especially if The New York Times prevails.

Earlier, OpenAI had reached copyright licensing agreements with the Associated Press news agency in July 2023 and, in December 2023, with Axel Springer, the German publisher that owns Politico and Business Insider.

Actress Sarah Silverman also joined a pair of lawsuits in July 2023, accusing Meta and OpenAI of using her memoir as training text for AI programs. Many writers expressed alarm when it was revealed that AI systems had absorbed tens of thousands of books into their training data, leading to lawsuits from authors such as Jonathan Franzen and John Grisham.

Meanwhile, photo service Getty Images has sued an AI company that generates images from text prompts, alleging unauthorized use of Getty's copyrighted visual material...

Users may run into copyright issues when they "carelessly" use "works" that they have asked AI tools to "create". Experts generally recommend using AI tools only for searching, collecting data, and generating suggestions for reference.

AI applications can also leave users unable to tell whether a given piece of content is true or false. Publishers and newsrooms may be unsure about the manuscripts they receive. Teachers likewise have trouble knowing whether students' work was produced with AI.

The public will now have to be more vigilant, because it is increasingly hard to know what is real and what is fake. For example, the average person will find it difficult to detect whether a photo has been "doctored" or edited by AI.

Legal regulations on AI use are needed

Until tools that can detect AI intervention become available, regulators need to issue clear and specific legal rules on the use of this technology to create content. Those rules should require that AI involvement in content and works be disclosed to everyone, for example by attaching a default watermark to images that have been manipulated by AI.

Source: https://nld.com.vn/mat-trai-cua-ung-dung-tri-tue-nhan-tao-196240227204333618.htm
