At its annual GTC conference, Nvidia announced its latest advances in the race to dominate the artificial intelligence (AI) chip market with two new flagship product lines, Blackwell Ultra and Vera Rubin.

According to CEO Jensen Huang, Blackwell Ultra is expected to launch in the second half of 2025. Vera Rubin, the next-generation GPU architecture, will follow in 2026, with Rubin Ultra arriving in 2027.

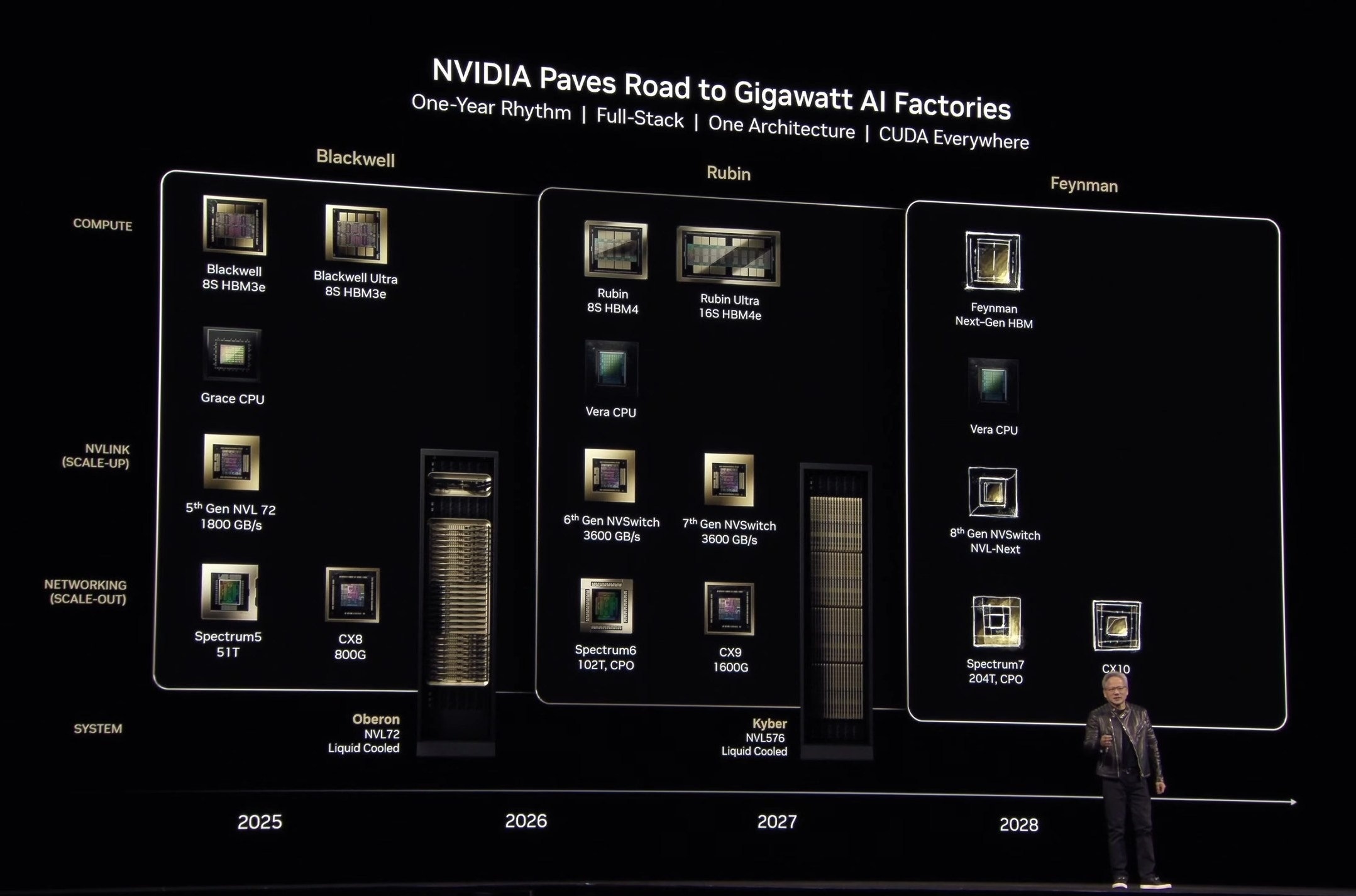

These announcements not only demonstrate Nvidia's superior technological prowess, but also mark a change in the company's product release strategy, moving from a biennial to an annual cycle.

Blackwell Ultra: A Big Step Forward on the Blackwell Platform

In 2024, the Blackwell AI chip took center stage. According to the chip giant, processors based on the Blackwell architecture, such as the GB200, bring a huge performance upgrade for businesses applying AI.

However, Blackwell only recently began shipping in large numbers, following delays in mass-producing the complex design.

Rumors of design flaws in the platform swirled through the industry as the original Q3 2024 shipping window slipped to 2025. Half of Nvidia’s investors questioned Blackwell’s claims in a post-earnings call.

To "compensate", Jensen Huang introduced an upgraded version of this chip line, called Blackwell Ultra, during his speech at the event.

Nvidia's roadmap for new GPU generations. Photo: Nvidia.

Blackwell Ultra builds on the Blackwell architecture, focusing on optimizing performance and memory for increasingly complex AI tasks.

Although it is not a completely new architecture, Blackwell Ultra still offers significant improvements over earlier generations, especially the H100 (launched in 2022), the chip line that laid the foundation for Nvidia's success in AI.

A single Blackwell Ultra chip delivers 20 petaflops of AI performance, the same as Blackwell, but carries up to 288 GB of HBM3e memory, a 50% increase over Blackwell's 192 GB. The extra capacity matters most for large AI models that require fast data access.

Compared to the H100, Blackwell Ultra delivers 1.5x the FP4 inference performance and significantly accelerates "AI reasoning".

The NVL72 cluster using Blackwell Ultra can run the large DeepSeek-R1 671B language model and return an answer in just 10 seconds, a leap from the 1.5 minutes of the H100. Notably, the new chip is capable of processing up to 1,000 tokens per second, 10 times more than the H100.
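The two comparisons above can be checked with simple arithmetic. The following is a minimal sketch that uses only the figures quoted in this article (Nvidia's own claims, not independent measurements); the variable and function names are illustrative.

```python
# Figures quoted in the article (Nvidia's claims, not independent benchmarks).
H100_ANSWER_SECONDS = 1.5 * 60      # "1.5 minutes" on the H100
ULTRA_ANSWER_SECONDS = 10.0         # "10 seconds" on the Blackwell Ultra NVL72
ULTRA_TOKENS_PER_SECOND = 1_000     # quoted Blackwell Ultra throughput
THROUGHPUT_RATIO = 10               # "10 times more than the H100"


def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times shorter the new answer time is than the old one."""
    return old_seconds / new_seconds


latency_speedup = speedup(H100_ANSWER_SECONDS, ULTRA_ANSWER_SECONDS)

# Throughput the H100 would need for the quoted 10x ratio to hold.
implied_h100_tokens_per_second = ULTRA_TOKENS_PER_SECOND / THROUGHPUT_RATIO

print(f"Answer-time speedup: {latency_speedup:.0f}x")
print(f"Implied H100 throughput: {implied_h100_tokens_per_second:.0f} tokens/s")
```

The answer-time figures imply roughly a ninefold speedup, which is broadly consistent with the quoted tenfold gain in tokens per second.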

According to Nvidia, major customers have purchased three times more Blackwell chips than Hopper, demonstrating strong confidence and demand for the architecture.

Next-Gen GPU Architecture for 2026

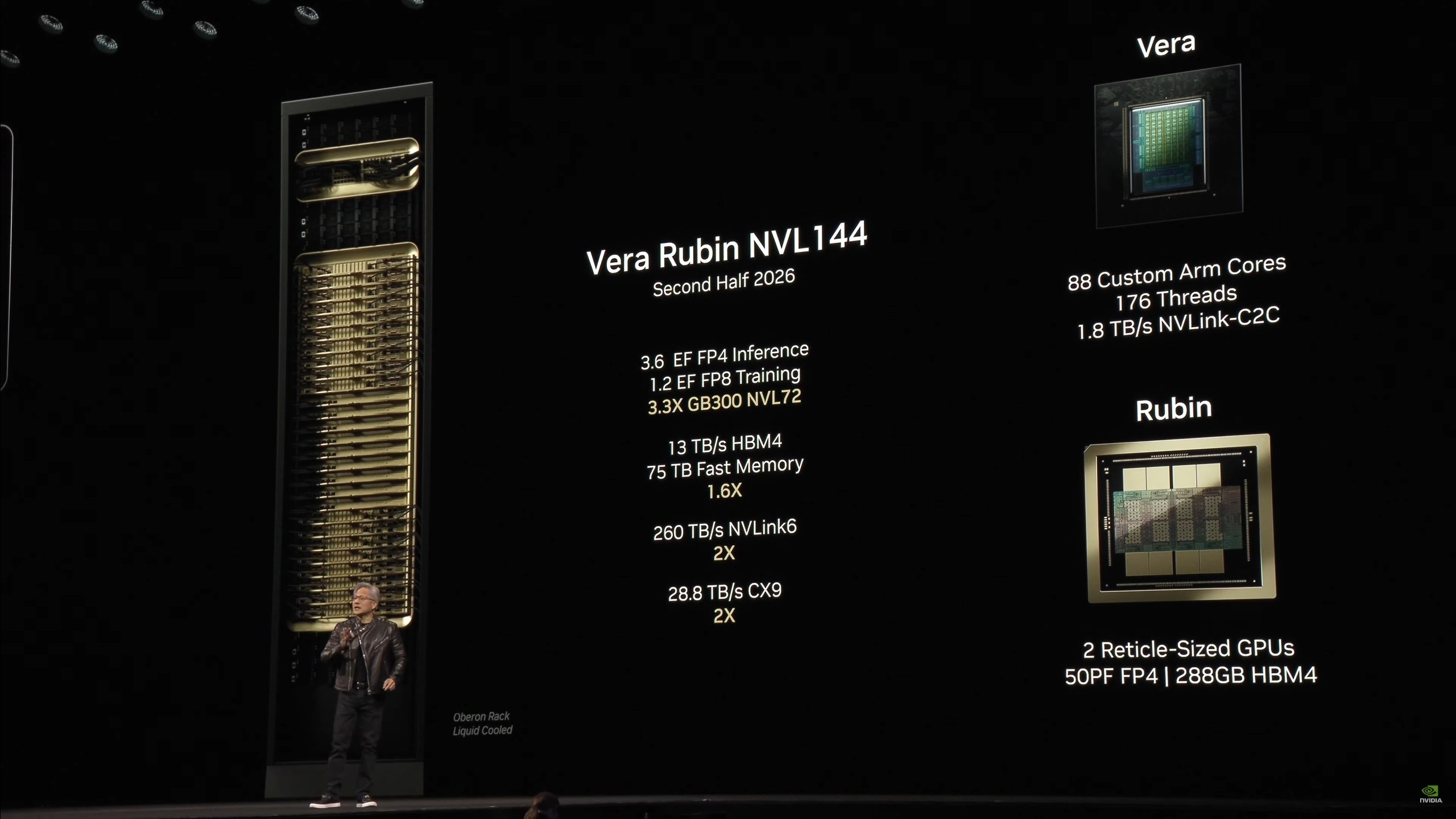

Following Blackwell Ultra, the chip giant revealed its next-generation GPU architecture called Vera Rubin, expected to launch in the second half of 2026. This system includes two main components: the Vera CPU (Nvidia's first custom CPU design based on the Olympus architecture) and the Rubin GPU.

According to The Verge, the Vera CPU is designed to be twice as fast as the CPU used in the 2024 Grace Blackwell chip.

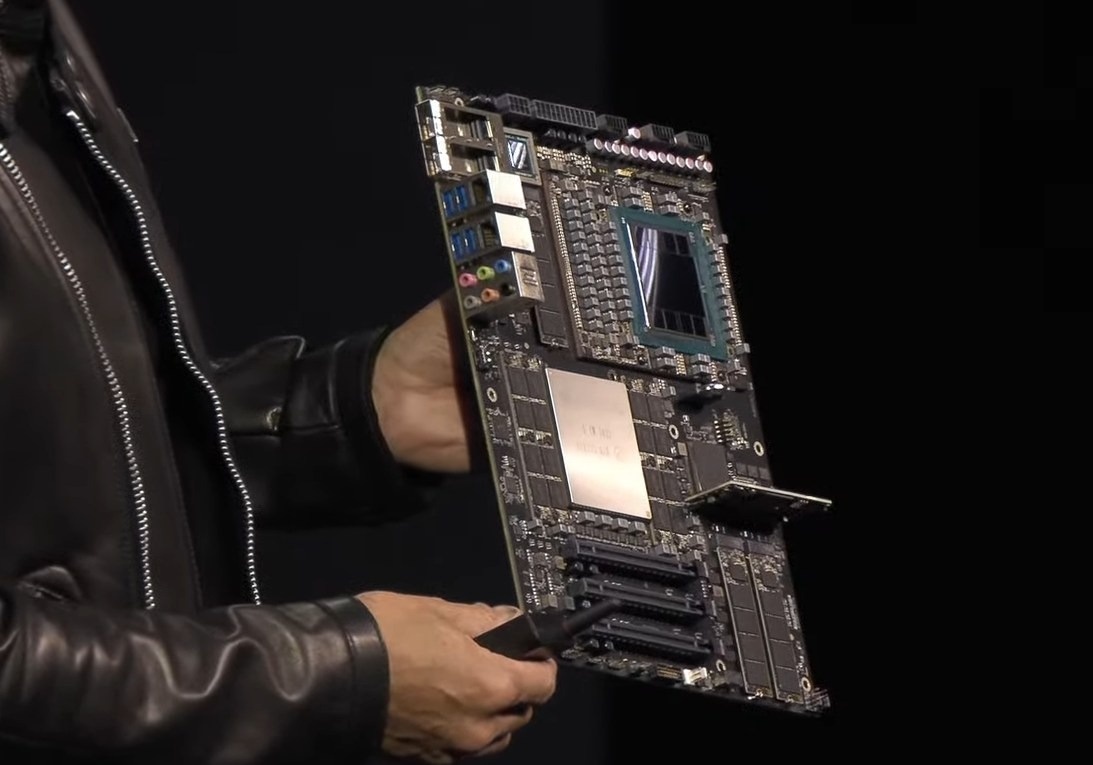

The DGX Station desktop motherboard integrates Nvidia's Blackwell Ultra GPU onboard. Photo: Nvidia.

Meanwhile, the Rubin GPU promises 50 petaflops of inference performance, 2.5 times the 20 petaflops of the current Blackwell chip. Rubin also supports up to 288 GB of fast memory, an important parameter for AI developers.

Another notable change is that Nvidia will redefine what it counts as a GPU with Rubin. Instead of calling a single chip assembled from multiple dies one GPU, Nvidia will count each die as a separate GPU. This means that a Rubin chip will contain up to two GPUs.

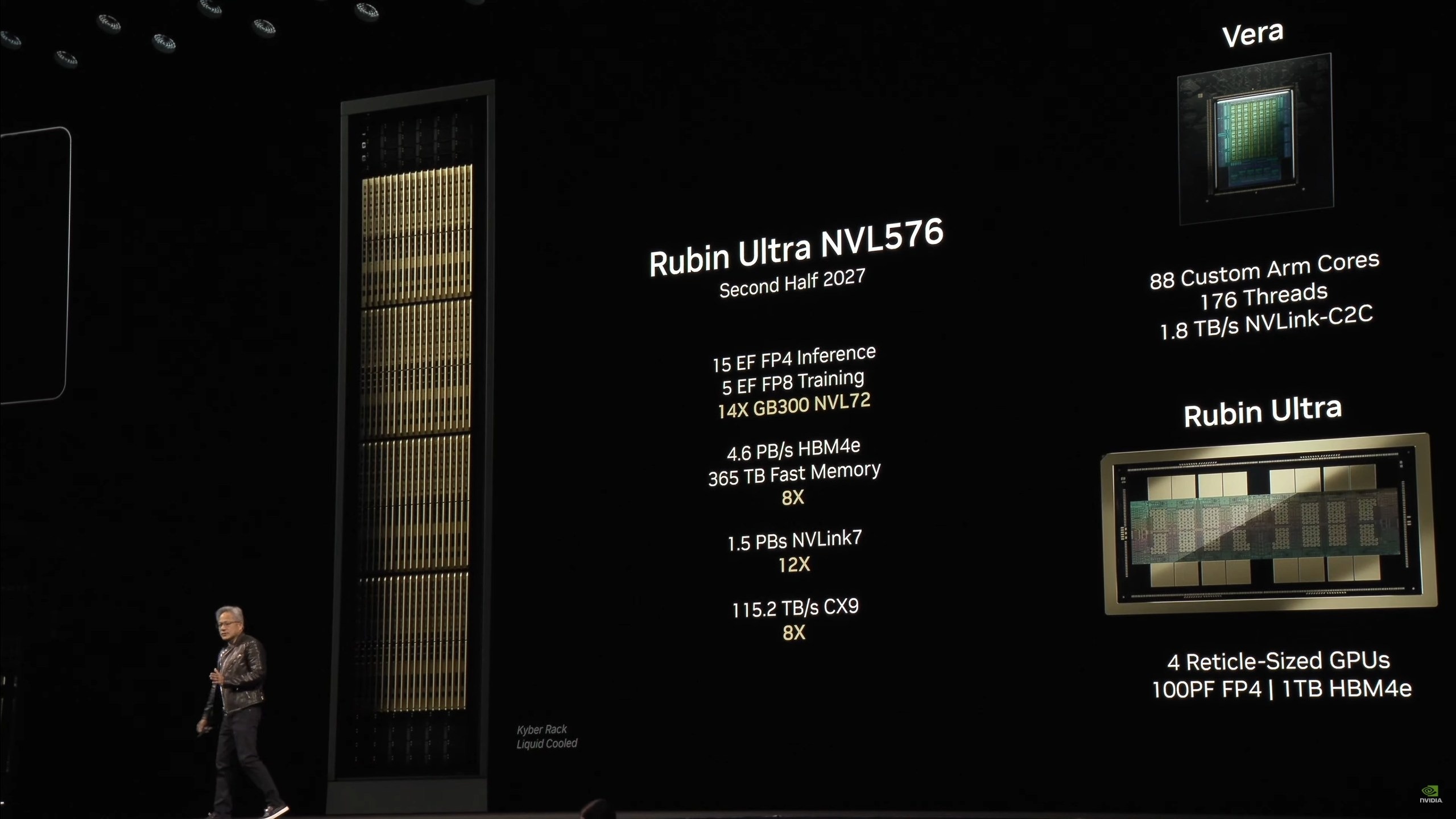

Finally, the world's largest GPU maker plans to launch Rubin Ultra (also known as Rubin Next) in the second half of 2027. Rubin Ultra will be a huge leap forward in performance, as a single chip will contain two Rubin GPUs connected together, delivering up to 100 petaflops of FP4 performance.

For comparison, that is double the performance of the Rubin GPU. Rubin Ultra's memory also rises significantly, to 1 TB.
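To put the roadmap figures side by side, here is a small sketch that computes the generation-over-generation ratios from the numbers quoted in this article. The `Chip` class and `generational_gains` function are illustrative names, and treating 1 TB as 1,000 GB is an assumption; the quoted petaflops figures are Nvidia's peak claims and may not all be measured under identical conditions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    name: str
    petaflops: float   # peak figure as quoted in the article
    memory_gb: float   # memory capacity as quoted in the article


# Assumption: 1 TB is taken as 1,000 GB for the Rubin Ultra entry.
ROADMAP = [
    Chip("Blackwell Ultra", 20, 288),
    Chip("Rubin", 50, 288),
    Chip("Rubin Ultra", 100, 1000),
]


def generational_gains(chips):
    """Return (name, compute ratio, memory ratio) of each chip vs. its predecessor."""
    return [
        (cur.name, cur.petaflops / prev.petaflops, cur.memory_gb / prev.memory_gb)
        for prev, cur in zip(chips, chips[1:])
    ]


gains = generational_gains(ROADMAP)
for name, compute, memory in gains:
    print(f"{name}: {compute:.2f}x compute, {memory:.2f}x memory")
```

On these figures, Rubin's gain over Blackwell Ultra is entirely in compute, while Rubin Ultra doubles compute again and adds most of the memory growth.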

Closing the presentation, Nvidia extended its long-term roadmap, saying that the chip architecture after Vera Rubin, expected to launch in 2028, will be named Feynman after the famous theoretical physicist Richard Feynman.

Performance of the two products built on the next-generation Vera Rubin GPU architecture, expected to launch in 2026 and 2027. Photo: Nvidia.

The announcements come as Nvidia is benefiting from the AI boom. The company’s sales have increased sixfold since ChatGPT launched, thanks to Nvidia’s GPU dominance in AI development.

Demand for AI computing power is growing rapidly, and CEO Jensen Huang believes the industry will need 100 times more computing power than was predicted in 2024 to meet it.

One notable highlight at GTC 2025 was Nvidia’s close collaboration with leading AI companies, including China’s DeepSeek. Using DeepSeek’s R1 model to benchmark its new chips shows the semiconductor giant’s focus on performance in complex inference tasks.

Source: https://znews.vn/at-chu-bai-moi-cua-nvidia-da-lo-dien-post1539279.html
