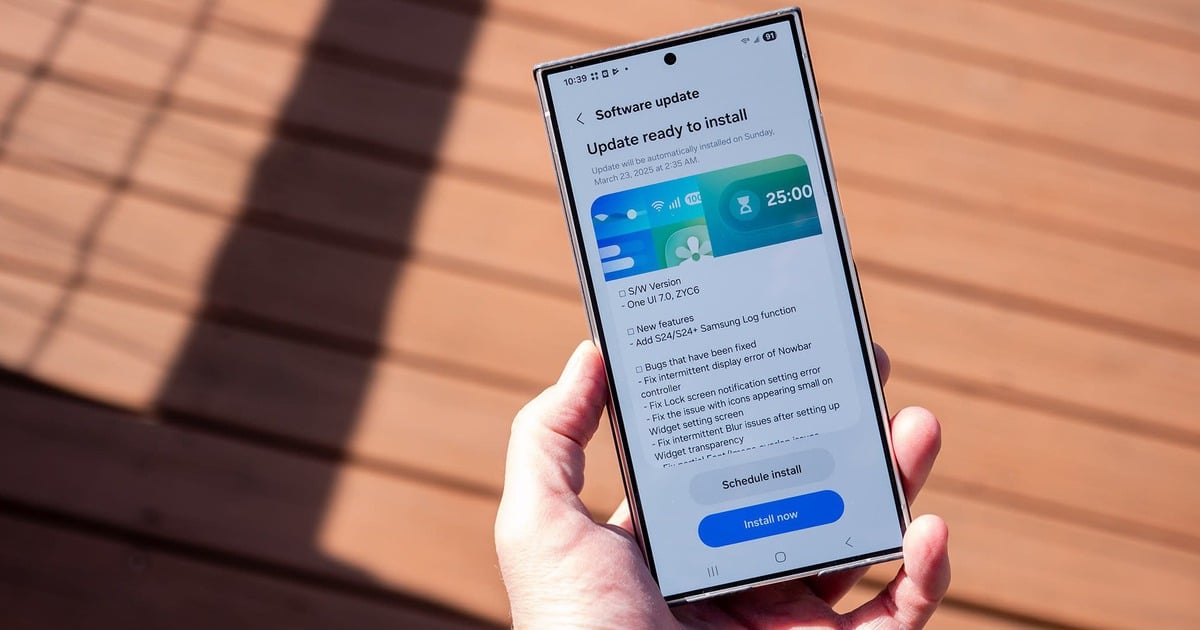

At the GTC 2025 conference in San Jose (USA), Asus announced an upgraded version of its AI POD server system. Built on the Nvidia GB300 NVL72 platform, the system integrates 72 Blackwell Ultra GPUs and 36 Grace CPUs, delivering higher performance for artificial intelligence (AI) workloads.

Asus showcases its next-generation AI POD system at GTC 2025, featuring Nvidia Blackwell GPUs and liquid cooling technology.

The new AI POD uses a rack-scale design that allows flexible expansion and supports up to 40TB of high-speed memory per rack. The system also integrates NVIDIA Quantum-X800 InfiniBand and Spectrum-X Ethernet networking, which speeds up data transfer between server clusters. Liquid cooling keeps the system running stably, especially during sustained high-intensity computing tasks such as large language model (LLM) training.

Alongside the AI POD, Asus launched a series of new AI servers in the Blackwell and HGX ecosystems, including the XA NB3I-E12, ESC NB8-E11, and ESC N8-E11V models. These products target a range of fields, including data science, finance, healthcare, and generative AI. According to Asus, the expanded AI server portfolio gives businesses more options to match their specific needs.

Asus says the new-generation AI POD is designed to meet the needs of large data centers and AI research organizations, and that its close collaboration with Nvidia on the project helps optimize AI development and operation at scale.

Source: https://thanhnien.vn/asus-ra-mat-ai-pod-su-dung-chip-blackwell-gb300-tai-gtc-2025-18525031920230298.htm

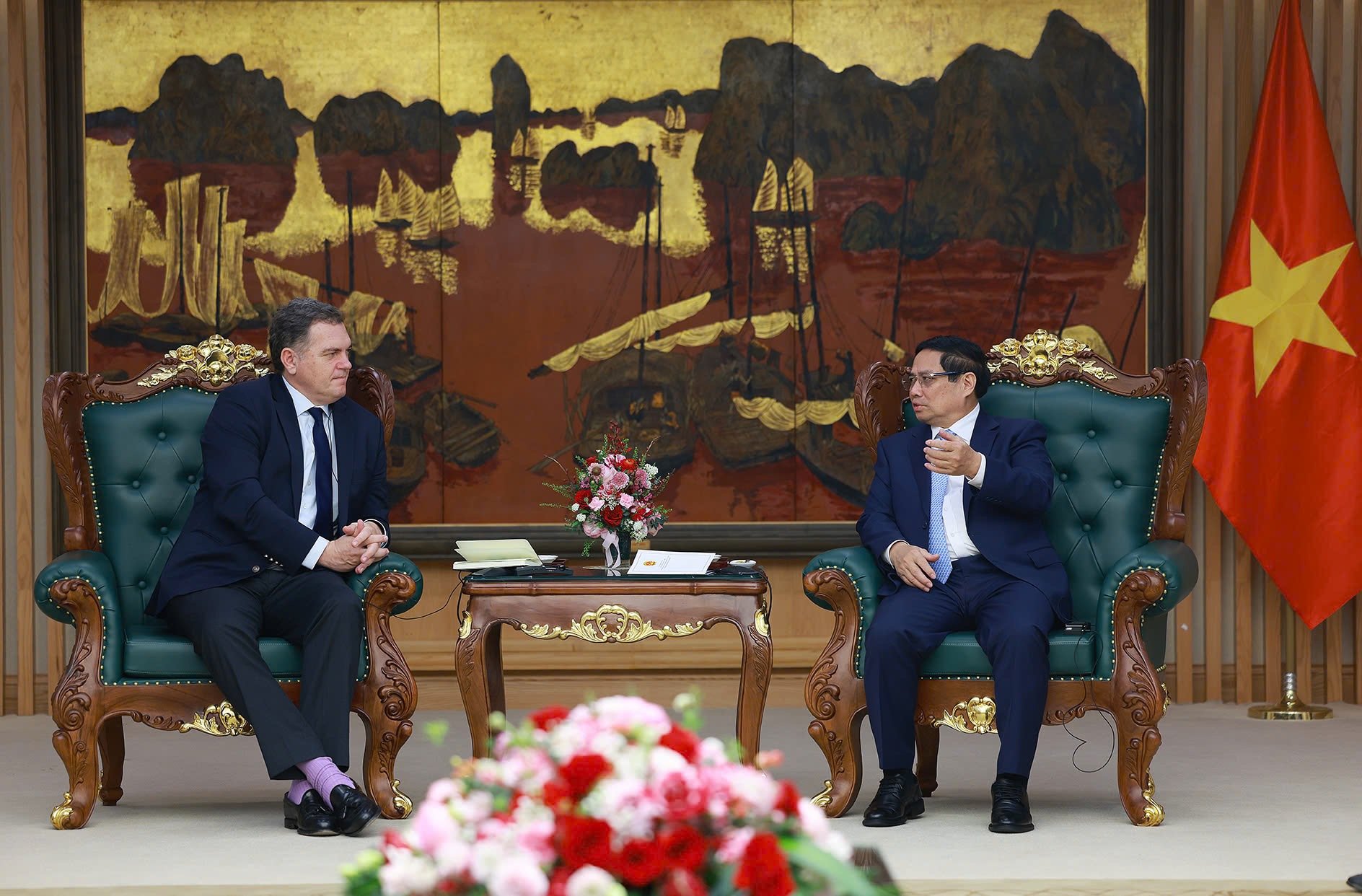
