OpenAI hasn’t revealed much about how GPT-4 was trained. But large language models (LLMs) are typically trained on text scraped from the internet, where English is the lingua franca. About 93% of GPT-3’s training data was in English.

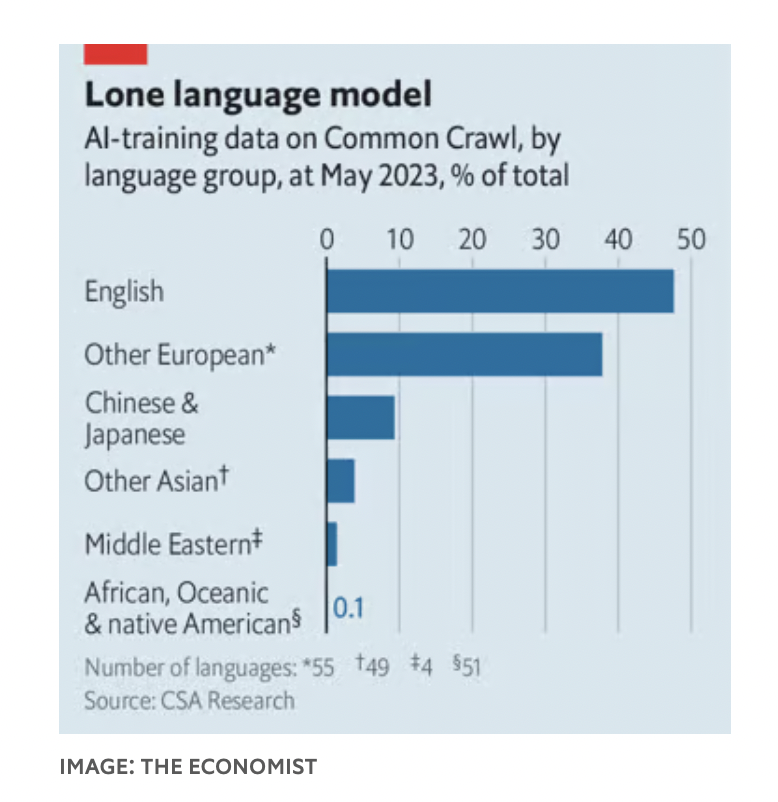

In Common Crawl, just one of the datasets on which the AI model was trained, English makes up 47% of the corpus, with other European languages making up a further 38%. By contrast, Chinese and Japanese combined make up just 9%.

The problem is not limited to ChatGPT, as Nathaniel Robinson, a researcher at Johns Hopkins University, and his colleagues found. Every LLM they tested performed better on “high-resource” languages, where training data is plentiful, than on “low-resource” languages, where it is scarce.

This is a problem for those hoping to bring AI to poor countries to improve everything from education to healthcare. So researchers around the world are working to make AI more multilingual.

Last September, the Indian government launched a chatbot that helps farmers stay updated with useful information from the government.

Shankar Maruwada of the EkStep Foundation, the nonprofit that helped build the chatbot, said the bot combines two types of language models so that users can submit queries in their native language. Each query is passed to machine translation software at an Indian research facility, which translates it into English and forwards it to the LLM. The LLM generates a response, which is then translated back into the user's native language.
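The translate, answer, translate-back flow described here can be sketched in a few lines of Python. This is only an illustration of the general pattern, not the chatbot's actual code: the function names, the signatures, and the toy stand-ins for the translation service and the LLM are all assumptions made for the example.

```python
from typing import Callable

# Hypothetical signatures, chosen only for this sketch.
Translate = Callable[[str, str, str], str]  # (text, source_lang, target_lang) -> text
Generate = Callable[[str], str]             # English prompt -> English answer


def answer_in_user_language(query: str, user_lang: str,
                            translate: Translate, generate: Generate) -> str:
    """Translate the query to English, ask the LLM, translate the answer back."""
    english_query = translate(query, user_lang, "en")
    english_answer = generate(english_query)
    return translate(english_answer, "en", user_lang)


# Toy stand-ins so the sketch runs; a real system would call actual services.
def toy_translate(text: str, source_lang: str, target_lang: str) -> str:
    # Only tags the text with the translation direction; no real translation.
    return f"[{source_lang}->{target_lang}] {text}"


def toy_generate(prompt: str) -> str:
    # Echoes the prompt instead of calling a model.
    return f"answer to: {prompt}"


if __name__ == "__main__":
    print(answer_in_user_language("मौसम कैसा है?", "hi", toy_translate, toy_generate))
```

Every query passes through translation twice, so anything lost in either step is also lost in the final answer.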

This process may work, but translating queries into the LLM’s “preferred” language is a clumsy workaround. Language reflects culture and worldview. A 2022 paper by Rebecca Johnson, a researcher at the University of Sydney, found that GPT-3’s answers on topics like gun control and refugee policy were in line with American values as expressed in the World Values Survey.

As a result, many researchers are trying to make LLMs fluent in less-used languages. Technically, one approach is to adapt the tokenizer, the component that splits text into the units a model processes, to the language. Sarvam AI, an Indian startup, has written a tokenizer optimized for Hindi. Its OpenHathi model, an LLM tuned for Hindi written in the Devanagari script, can significantly reduce the cost of answering questions.
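One reason tokenization matters for cost can be shown with a small Python sketch. It rests on an assumption not stated in the article: that the tokenizer works from UTF-8 bytes, as byte-level tokenizers do. In UTF-8, each Devanagari character takes three bytes while each basic Latin letter takes one, so a tokenizer with few learned Hindi units may need more tokens for the same word. The helper names below are made up for the example, and the code does not represent Sarvam AI's tokenizer.

```python
def utf8_byte_count(text: str) -> int:
    """Number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


def bytes_per_character(text: str) -> float:
    """Average UTF-8 bytes per Unicode code point in the text."""
    return utf8_byte_count(text) / len(text)


if __name__ == "__main__":
    # "पानी" is the Hindi word for "water".
    for label, word in (("English", "water"), ("Hindi", "पानी")):
        print(label, word, len(word), "code points,",
              utf8_byte_count(word), "bytes")
```

In the worst case for a byte-level tokenizer, where no multi-byte units have been learned for a script, the token count equals the byte count. That is why adding vocabulary suited to Hindi can shorten inputs and outputs.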

Another way is to improve the datasets on which an LLM is trained. In November, a team of researchers at Mohamed bin Zayed University in Abu Dhabi released the latest version of their Arabic-speaking model, called “Jais.” It has one-sixth as many parameters as GPT-3, but performs on par with it in Arabic.

Timothy Baldwin, president of Mohamed bin Zayed University, noted that although his team digitized a lot of Arabic text, some English text was still included in the model's training data. Some concepts are the same in all languages and can be learned in any language.

The third approach is to fine-tune models after they have been trained. Both Jais and OpenHathi were fine-tuned on a number of human-generated question-answer pairs. The same is done for Western chatbots, to prevent misinformation.

Ernie Bot, an LLM from Baidu, a major Chinese tech company, has been tweaked to limit speech that could offend the government. The models can also learn from human feedback, with users rating the LLM’s answers. But that’s difficult to do for many languages in less developed regions because of the need to hire qualified people to critique the machine’s responses.

(According to The Economist)
