Nvidia announced a slew of new products at its developer event on March 18 to solidify its position in the artificial intelligence (AI) market. The chipmaker’s stock price has increased fivefold and revenue has more than tripled since ChatGPT kicked off the global AI race in late 2022. Nvidia’s high-end server GPUs are critical for training and deploying large language models, with companies like Microsoft and Meta spending billions of dollars on the chips.

Nvidia calls its next-generation AI chip Blackwell. The first Blackwell chip, the GB200, will be available later this year. Nvidia is offering customers more powerful chips to spur new orders. Customers are still scrambling to get their hands on the H100 Hopper chip.

“Hopper is great, but we need bigger GPUs,” Nvidia CEO Jensen Huang said at the event.

Along with the Blackwell chip, Nvidia also introduced NIM software that makes it easier to deploy AI. Nvidia officials say the company is becoming more of a platform provider like Apple and Microsoft than a chip supplier.

“Blackwell is not a chip, it’s the name of a platform,” said Huang. Nvidia Vice President Manuvir Das promised that NIM software would help developers run programs on any Nvidia GPU, old or new, to reach more people.

Blackwell, Hopper's "successor"

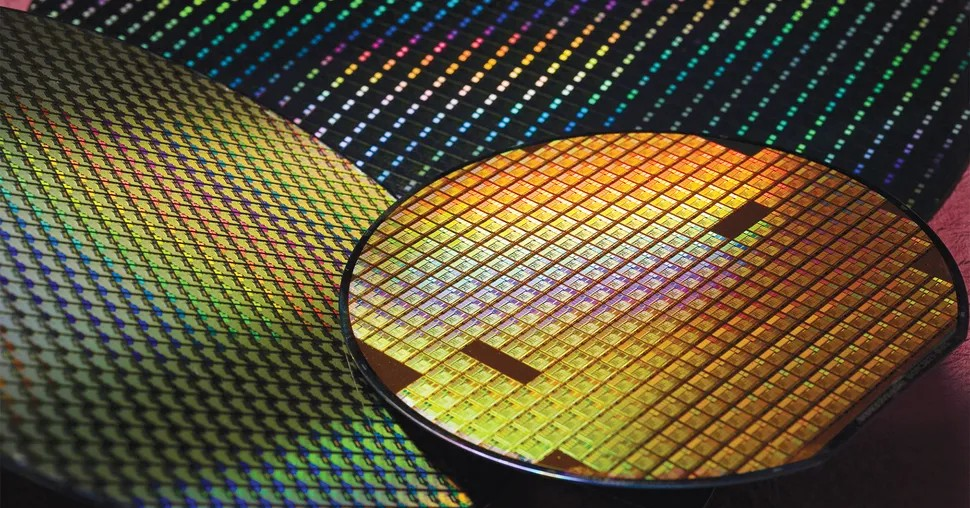

Every two years, Nvidia updates its GPU architecture, unlocking new performance gains. Many AI models released last year were trained on the Hopper architecture, which was announced in 2022.

Nvidia says Blackwell-based chips like the GB200 offer a significant performance boost for AI companies, delivering 20 petaflops of AI performance compared with 4 petaflops for the H100. This processing power allows them to train larger and more complex models.

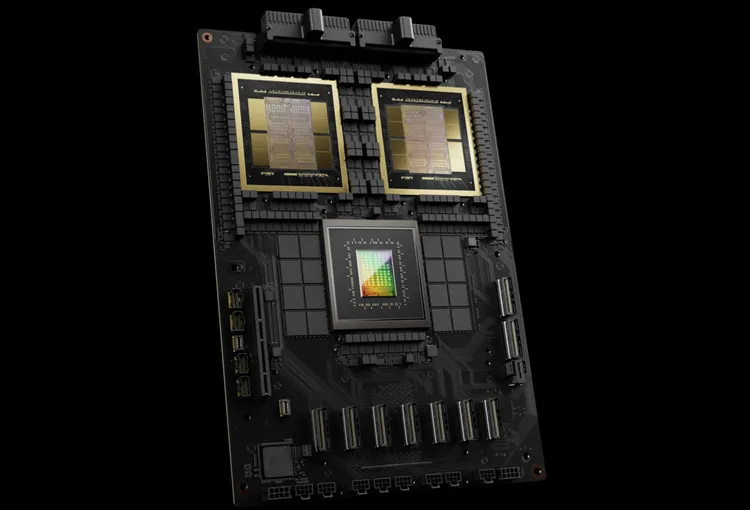

The Blackwell GPU is large and combines two separately manufactured dies into a single chip. It will also be available as a complete server, the GB200 NVL72, which combines 72 Blackwell GPUs with other Nvidia parts designed to train AI models.

Amazon, Google, Microsoft, and Oracle will sell access to the GB200 through cloud services. The GB200 combines two B200 Blackwell GPUs with a Grace CPU. Nvidia said Amazon Web Services (AWS) will build a server cluster with 20,000 GB200 chips.

Nvidia says the system can run a 27 trillion parameter model, much larger than the largest models available today, such as GPT-4, which reportedly has 1.7 trillion parameters. Many AI researchers believe that larger models with more parameters and data could unlock new capabilities.

Nvidia has not announced pricing for the new GB200 or systems that include the GB200. Nvidia’s Hopper-based H100 costs between $25,000 and $40,000 per unit, and the entire system costs as much as $200,000, according to analyst estimates.

(According to CNBC)
