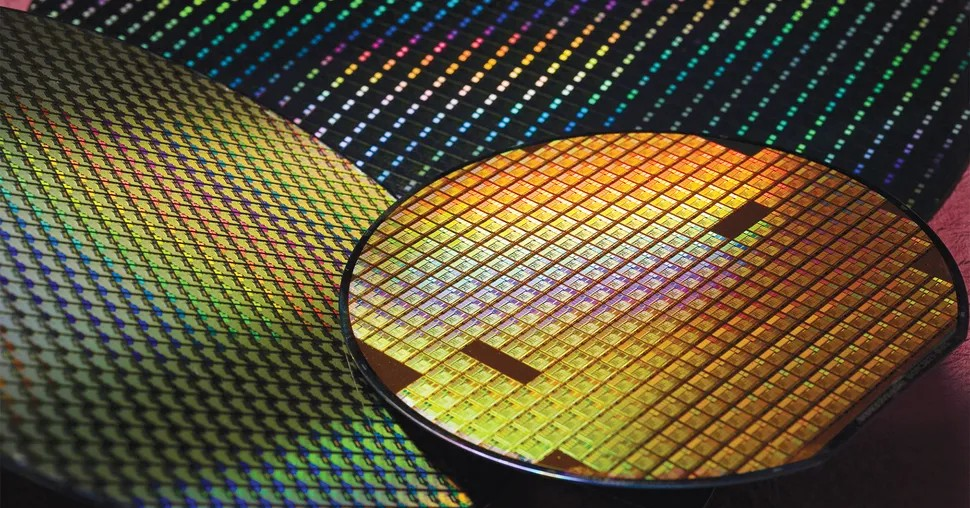

According to the Financial Times, Baidu, ByteDance, Tencent and Alibaba spent $1 billion to buy about 100,000 A800 processors from Nvidia. The Chinese companies also bought a further $4 billion worth of graphics chips (GPUs) for delivery in 2024.

The A800 is a weaker version of Nvidia's A100. Due to Washington's 2022 regulations, Chinese companies can only buy the A800 chip, which has slower data transfer speeds.

As AI takes the world by storm, Nvidia’s GPUs have become the hottest commodity because they provide the computing power needed to develop large language models. Chinese tech giants are racing to stockpile A800 chips amid concerns that the Biden administration will impose new export restrictions that could affect Nvidia’s lower-end chips, and amid a GPU shortage caused by surging demand.

On August 9, Washington announced a new ban that will take effect next year on some US investments in Chinese high-tech sectors, including quantum computing, advanced chips and AI. “Without Nvidia chips, we can’t train any major language models,” said an anonymous Baidu employee.

Companies are developing their own large language models following the success of ChatGPT, a chatbot launched by OpenAI eight months ago. Several small teams within ByteDance are working on various generative AI products, including the Grace chatbot, according to the source.

Earlier this year, ByteDance tested a generative AI feature for TikTok, called TikTok Tako, using OpenAI’s ChatGPT. Two employees said the company has stockpiled at least 10,000 Nvidia GPUs to support its ambitions. ByteDance has also ordered nearly 70,000 A800 chips worth about $700 million for next year.

Meanwhile, Alibaba wants to bring large language models to all its products, including its Taobao online shopping platform and its Gaode Map mapping tool. Baidu has a ChatGPT-like project called Ernie Bot.

Consumer Internet companies and cloud service providers invest billions of dollars in data center components each year, often placing orders months in advance, according to Nvidia.

At the start of 2023, most Chinese internet giants did not have large inventories of chips used to train large language models. As AI demand grew, chip prices rose. One Nvidia distributor said the price of the A800 had increased by more than 50%.

(According to the Financial Times)
