|

OpenAI's chatbot is getting better with new technologies. Photo: New York Times . |

In September 2024, OpenAI released a version of ChatGPT that integrates the o1 model, which can reason on tasks related to mathematics, science, and computer programming.

Unlike the previous version of ChatGPT, the new technology will take time to “think” about solutions to complex problems before giving a response.

After OpenAI, many competitors such as Google, Anthropic and DeepSeek also introduced similar reasoning models. Although not perfect, this is still a chatbot upgrade technology that many developers trust.

How AI Reasons

Basically, reasoning means that the chatbot can spend more time solving the problem presented by the user.

“Reasoning is how the system does more work after it gets a question,” Dan Klein, a professor of computer science at the University of California, Berkeley, told the New York Times .

The reasoning system can break down a problem into individual steps, or solve it through trial and error.

When it first launched, ChatGPT could answer questions instantly by extracting and synthesizing information. Meanwhile, reasoning systems needed a few more seconds (or even minutes) to solve the problem and respond.

|

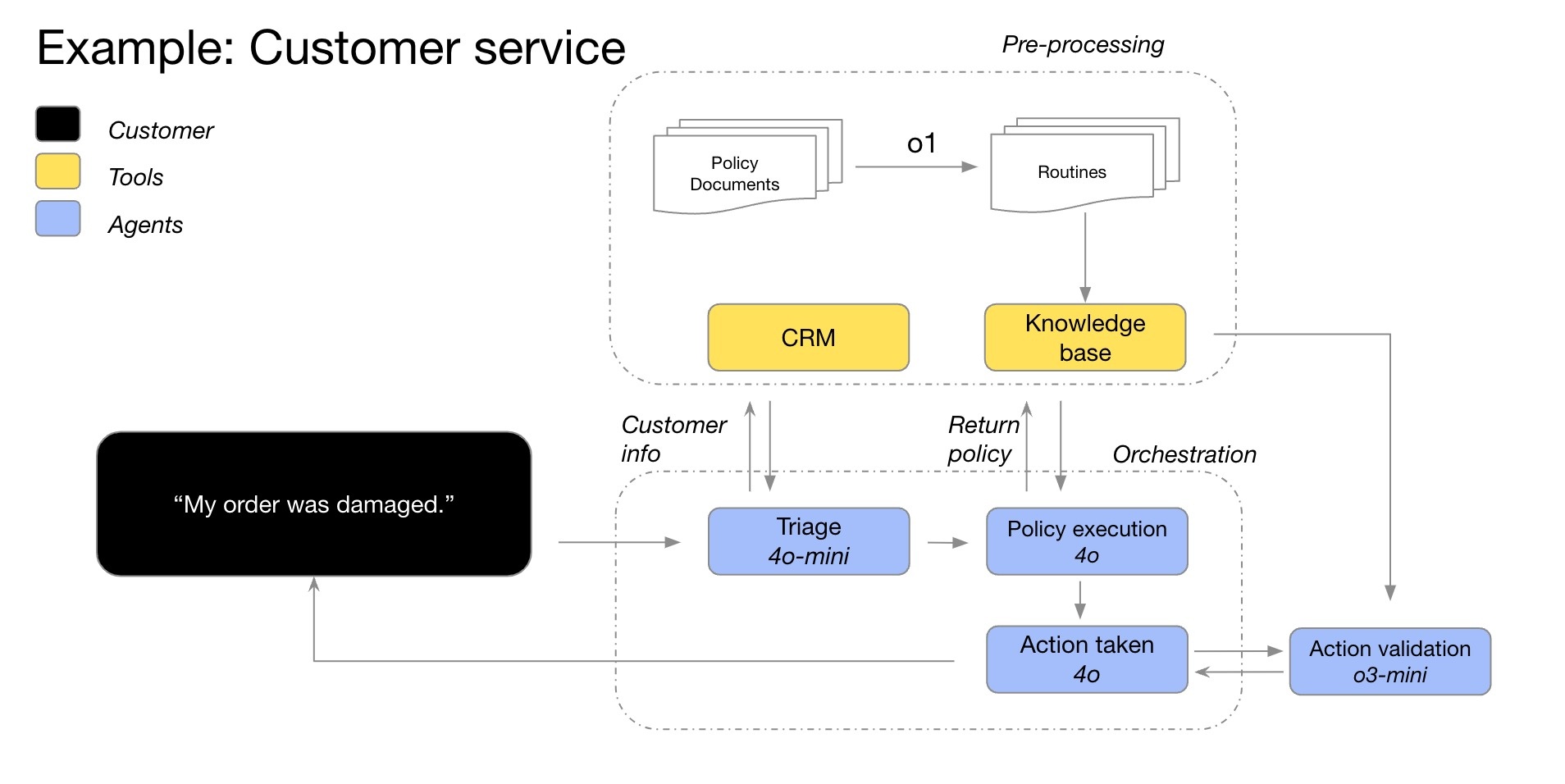

Example of the reasoning process of the o1 model in a customer care chatbot. Photo: OpenAI . |

In some cases, the reasoning system will change its approach to the problem, continually improving the solution. Alternatively, the model may try multiple solutions before settling on the optimal choice, or test the accuracy of previous responses.

In general, the reasoning system will consider all possible answers to the question. This is similar to elementary school students, who write down many options on paper before choosing the most suitable way to solve a math problem.

According to the New York Times , AI is now capable of reasoning about any topic. However, the task will be most effective with questions related to math, science, and computer programming.

How is the theoretical system trained?

On a regular chatbot, users can still ask for an explanation of the process or check the correctness of a response. In fact, many ChatGPT training datasets already include a problem-solving process.

A reasoning system goes even further when it can perform an action without being asked by the user. This process is more complex and far-reaching. Companies use the term “reasoning” because the system works in a similar way to human thinking.

Many companies like OpenAI are betting that reasoning systems are the best way to improve chatbots today. For years, they believed that chatbots would work better if they were trained on as much information as possible on the internet.

By 2024, AI systems will have consumed nearly all the text available on the internet. That means companies will need to find new solutions to upgrade chatbots, including reasoning systems.

|

Startup DeepSeek once "caused a stir" with a reasoning model that cost less than OpenAI. Photo: Bloomberg . |

Since last year, companies like OpenAI have focused on a technique called reinforcement learning, a process that typically takes several months and involves AI learning behavior through trial and error.

For example, by solving thousands of problems, the system can learn the optimal method for getting the right answer. From there, the researchers built complex feedback mechanisms that help the system learn the right and wrong solutions.

“It’s like training a dog. If it works well, you give it a treat. If it doesn’t work, you say, ‘That dog is bad,’” said Jerry Tworek, a researcher at OpenAI.

Is AI the future?

According to the New York Times , reinforcement learning works well with math, science, and computer programming questions, where there are clearly defined right or wrong answers.

By contrast, reinforcement learning doesn’t work well in writing, philosophy, or ethics, where it’s hard to distinguish between good and bad. But the researchers say the technique can still improve AI performance, even on questions outside of math.

“Systems will learn the paths that lead to positive and negative outcomes,” said Jared Kaplan, Chief Science Officer at Anthropic.

|

Website of Anthropic, the startup that owns the AI model Claude. Photo: Bloomberg . |

It is important to note that reinforcement learning and reasoning systems are two different concepts. Specifically, reinforcement learning is a method of building reasoning systems. This is the final training stage for chatbots to have reasoning capabilities.

Because they’re still relatively new, scientists can’t be sure whether reasoning chatbots or reinforcement learning can help AI think like humans. It’s worth noting that many current AI training trends develop very quickly in the beginning and then slow down.

Furthermore, reasoning chatbots can still make mistakes. Based on probability, the system will choose the process that is most similar to the data it learned, whether it comes from the Internet or through reinforcement learning. Therefore, chatbots can still choose the wrong or unreasonable solution.

Source: https://znews.vn/ai-ly-luan-nhu-the-nao-post1541477.html

![[Photo] Visiting Cu Chi Tunnels - a heroic underground feat](https://vstatic.vietnam.vn/vietnam/resource/IMAGE/2025/4/8/06cb489403514b878768dd7262daba0b)

![[Photo] National Assembly Chairman successfully concludes official visit to Uzbekistan](https://vstatic.vietnam.vn/vietnam/resource/IMAGE/2025/4/9/8a520935176a424b87ce28aedcab6ee9)

Comment (0)