According to BGR , a new research report has just published an alarming technique called 'Fun-Tuning', when using AI (artificial intelligence) itself to automatically create extremely effective prompt injection attacks targeting other advanced AI models, including Google's Gemini.

The method makes 'cracking' AI faster, cheaper and easier than ever, marking a new escalation in the cybersecurity battle involving AI.

The danger when bad guys use AI to break AI

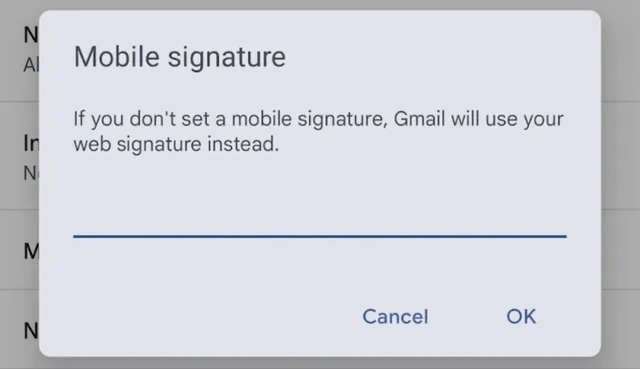

Prompt injection is a technique in which an adversary sneaks malicious instructions into the input data of an AI model (e.g., via comments in source code, hidden text on the web). The goal is to 'trick' the AI into bypassing pre-programmed safety rules, leading to serious consequences such as leaking sensitive data, providing false information, or performing other malicious actions.

Hackers are using AI to attack AI

PHOTO: LINKEDIN SCREENSHOT

Previously, successfully executing these attacks, especially on 'closed' models like Gemini or GPT-4, often required a lot of complex and time-consuming manual testing.

But Fun-Tuning has changed the game entirely. Developed by a team of researchers from multiple universities, the method cleverly exploits the very tuning application programming interface (API) that Google provides for free to Gemini users.

By analyzing the subtle reactions of the Gemini model during tuning (e.g. how it responds to errors in the data), Fun-Tuning can automatically determine the most effective 'prefixes' and 'suffixes' to hide a malicious command. This significantly increases the likelihood that the AI will comply with the attacker's malicious intentions.

Test results show that Fun-Tuning achieves a success rate of up to 82% on some versions of Gemini, a figure that surpasses the less than 30% of traditional attack methods.

Adding to the danger of Fun-Tuning is its low cost. Since Google’s tuning API is freely available, the computational cost of creating an effective attack can be as low as $10. Furthermore, the researchers found that an attack designed for one version of Gemini could easily be successfully applied to other versions, opening up the possibility of widespread attacks.

Google has confirmed that it is aware of the threat posed by Fun-Tuning, but has not yet commented on whether it will change how the tuning API works. The researchers also point out the defensive dilemma: removing the information that Fun-Tuning exploits from the tuning process would make the API less useful to legitimate developers. Conversely, leaving it as it is would continue to be a springboard for bad actors to exploit.

The emergence of Fun-Tuning is a clear warning that the confrontation in cyberspace has entered a new, more complex phase. AI is now not only a target but also a tool and weapon in the hands of malicious actors.

Source: https://thanhnien.vn/hacker-dung-ai-de-tan-cong-gemini-cua-google-18525033010473121.htm

![[Photo] Looking back at the impressive moments of the Vietnamese rescue team in Myanmar](https://vstatic.vietnam.vn/vietnam/resource/IMAGE/2025/4/11/5623ca902a934e19b604c718265249d0)

![[Photo] "Beauties" participate in the parade rehearsal at Bien Hoa airport](https://vstatic.vietnam.vn/vietnam/resource/IMAGE/2025/4/11/155502af3384431e918de0e2e585d13a)

![[Photo] Summary of parade practice in preparation for the April 30th celebration](https://vstatic.vietnam.vn/vietnam/resource/IMAGE/2025/4/11/78cfee0f2cc045b387ff1a4362b5950f)

Comment (0)