As the race to develop artificial intelligence (AI) grows increasingly fierce, Anthropic has emerged as a company with a distinct mission: building artificial general intelligence (AGI) that is not only powerful, but also safe and ethical.

Founded by former OpenAI executives Dario and Daniela Amodei, Anthropic is not just about performance. The AI startup is focused on ensuring that artificial intelligence will bring real benefits to humanity, rather than posing unpredictable risks.

Unique Approach

Anthropic was born out of deep concerns about the direction of the AI industry, and of OpenAI in particular. Dario Amodei, then vice president of research at OpenAI, the creator of ChatGPT, felt that safety was not being given enough priority in the rush to develop AI quickly.

Dario Amodei, co-founder and CEO of Anthropic. Photo: Wired.

After leaving OpenAI, Amodei founded Anthropic and made "constitutional AI" one of the pillars of its development philosophy.

Specifically, instead of relying on rigid pre-programmed rules, Anthropic equips its AI models, most notably Claude, with the ability to evaluate and adjust their own behavior against a set of ethical principles carefully selected from many different sources.

In other words, the system allows Claude to make decisions that are consistent with human values even in complex and unprecedented situations.

In addition, Anthropic has developed a “Responsible Scaling Policy,” a tiered risk assessment framework for AI systems. This policy helps the company closely monitor the development and deployment of AI, ensuring that potentially more dangerous systems are only activated when robust and reliable safeguards have been established.

Logan Graham, who leads Anthropic's security and privacy efforts, explained to Wired that his team is constantly testing new models to find potential vulnerabilities. Engineers then tweak the AI model until it meets Graham's criteria.

The Claude large language model plays a central role in everything Anthropic does. Not only is it a powerful research tool, helping scientists explore the mysteries of AI, but it is also widely used internally within the company for tasks like writing code, analyzing data, and even drafting internal newsletters.

The Dream of Ethical AI

Dario Amodei is not only focused on preventing the potential risks of AI, but also dreams of a bright future where AI will act as a positive force, solving humanity's most intractable problems.

Benchmark scores of Claude 3.5 Sonnet compared with some other models. Photo: Anthropic.

The Italian-American researcher even believes that AI has the potential to bring about huge breakthroughs in medicine, science and many other fields, especially the possibility of extending human life expectancy up to 1,200 years.

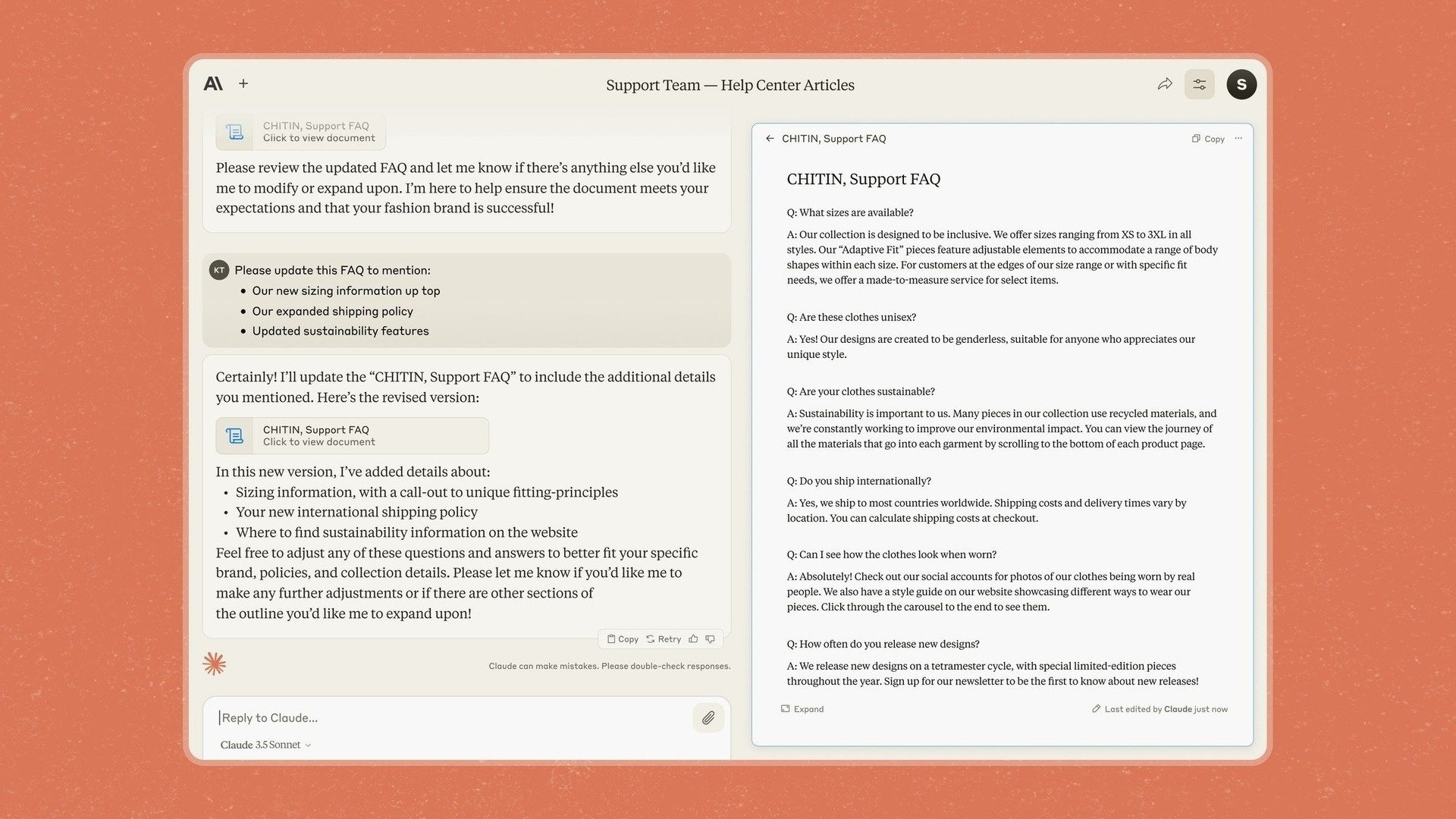

That's also why Anthropic introduced Artifacts in Claude 3.5 Sonnet, a feature that allows users to edit and add content directly to chatbot responses instead of having to copy it to another application.

Having previously stated its focus on businesses, Anthropic said that with its new model and tools, it wants to turn Claude into an app that allows companies to “securely bring knowledge, documents, and work into shared spaces.”

However, Anthropic is also well aware of the challenges and potential risks on the road to realizing this dream. One of the biggest concerns is the potential for “fake compliance” by AI models like Claude.

Specifically, the researchers found that in certain situations, Claude could still behave in a "fake" way to achieve its goals, even when doing so went against the moral principles it was designed to follow.

The Artifacts feature in the Claude chatbot. Photo: Anthropic.

“In situations where the AI thinks there is a conflict of interest with the company that is training it, it will do really bad things,” one researcher said, describing the situation.

This shows that ensuring that AI always acts in the best interests of humans is a complex task and requires constant monitoring.

Amodei himself has likened the urgency of AI safety to a “Pearl Harbor,” suggesting that it may take a major event for people to truly realize the seriousness of the potential risks.

“We have figured out the basic formula for making models smarter, but we haven’t figured out how to make them do what we want,” said Jan Leike, a safety researcher at Anthropic.

Source: https://znews.vn/nguoi-muon-tao-ra-tieu-chuan-dao-duc-moi-cho-ai-post1541798.html
