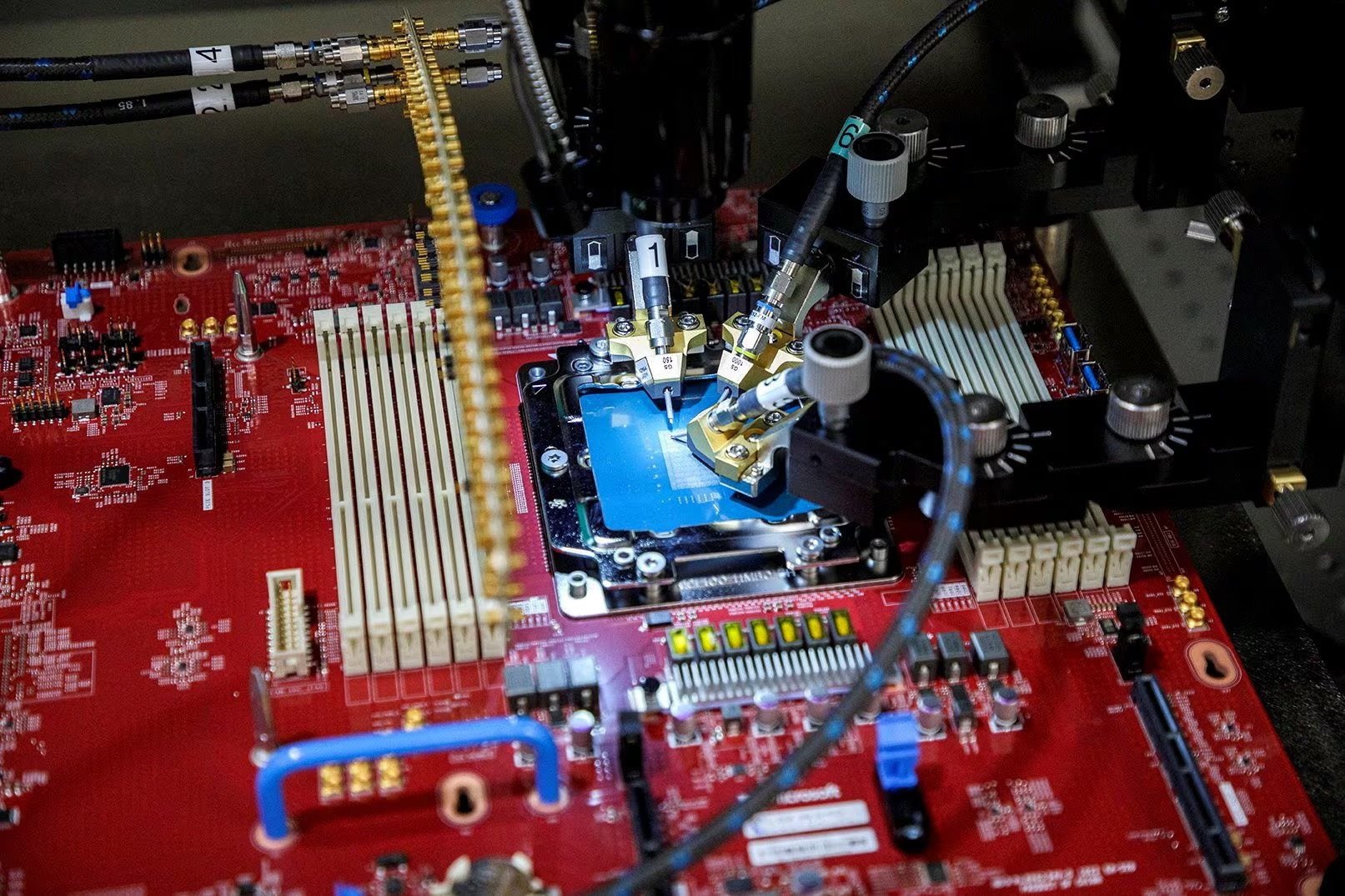

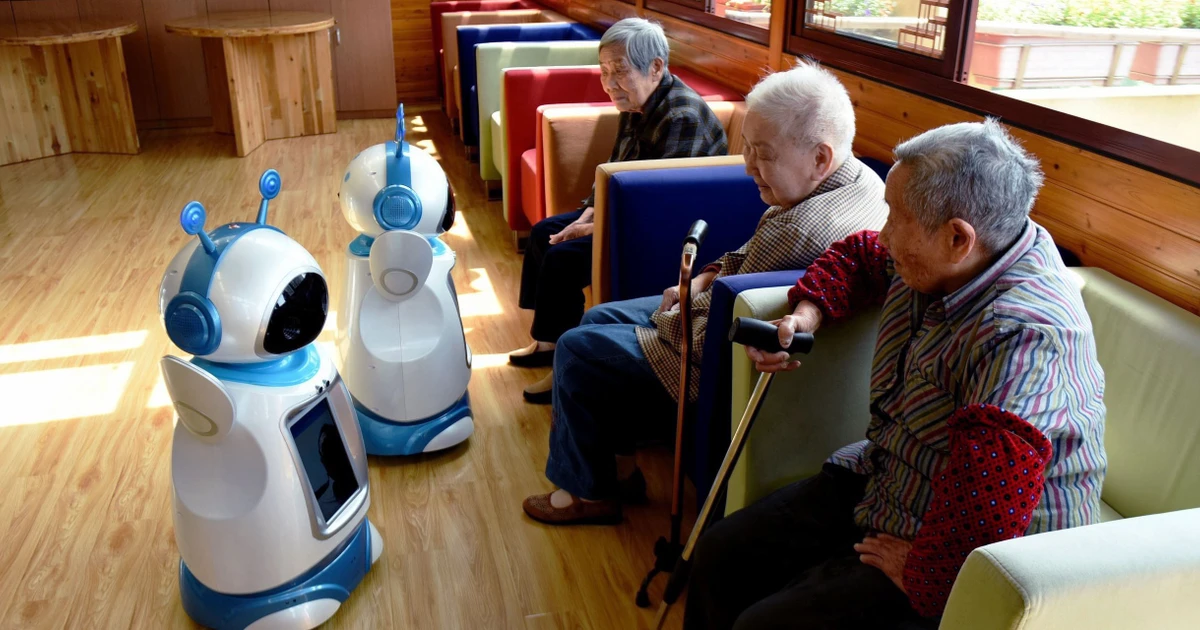

The Windows giant says it has no plans to sell the chips. Instead, it will use them internally to power its own software products and as part of its Azure cloud computing service.

Solutions to rising costs

Microsoft and other tech giants such as Alphabet (Google) are grappling with the high cost of providing AI services, which can run more than 10 times the cost of traditional services such as search engines.

Microsoft executives say they plan to tackle the rising cost of AI by using a common foundation model to deeply integrate AI across the company's entire software ecosystem. The Maia chip is designed for exactly that purpose.

The Maia chip is designed to run large language models (LLMs), which underpin the Azure OpenAI service, a collaboration between Microsoft and OpenAI, the company behind ChatGPT.

“We think this gives us a way to be able to deliver better solutions to our customers at a faster pace, at a lower cost, and with higher quality,” said Scott Guthrie, executive vice president of Microsoft’s cloud and AI division.

Microsoft also said that next year it will offer Azure customers cloud services running on the latest flagship chips from Nvidia and Advanced Micro Devices (AMD). The company is currently testing GPT-4 on AMD chips.

Increasing competition in the cloud sector

The second chip, called Cobalt, is intended both to cut Microsoft's internal costs and to compete with Amazon Web Services (AWS), which uses its own in-house "Graviton" chips.

Cobalt is an Arm-based central processing unit (CPU) currently being tested to power the Teams enterprise messaging software.

AWS says its Graviton chip currently has about 50,000 customers, and the company will host a developer conference later this month.

"AWS will continue to innovate to deliver future generations of custom-designed chips that deliver even better price-performance, for any workload customers require," AWS said in a statement after Microsoft announced the AI chip duo.

Rani Borkar, corporate vice president of Azure hardware and infrastructure, said both new chips are manufactured on TSMC's 5nm process.

Maia chips will be connected using standard Ethernet cabling rather than the more expensive custom Nvidia networking technology that Microsoft used in the supercomputers it built for OpenAI.

(According to Reuters)

MediaTek Launches Mobile AI Chip That Can Write Poetry, Create Images Without the Internet

Mobile chip designer MediaTek has just launched the Dimensity 9300 5G chipset with an integrated AI processor (also known as an APU), compatible with generative AI tasks such as generating images from text prompts without an Internet connection.

Nvidia faces immediate export ban on certain AI chips

The US government is demanding that Nvidia immediately stop exporting certain chips without a license from the Commerce Department.

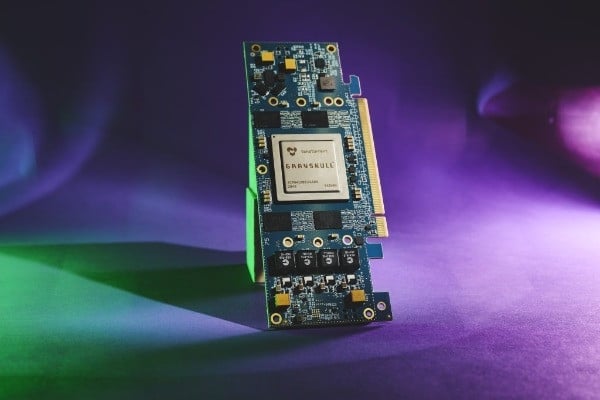

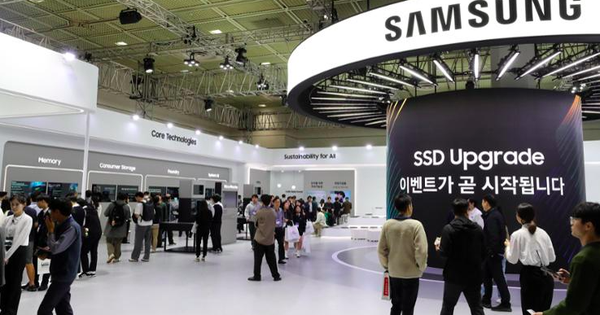

AI chip 'unicorn' joins hands with Samsung, challenging Nvidia

Tenstorrent, a Canadian-based billion-dollar AI chip startup, has just reached an agreement to use Samsung's 4-nanometer (nm) microprocessor manufacturing technology.
