Online fraudsters not only impersonate relatives, police officers, and others, but also manipulate artificial intelligence (AI) tools to generate hundreds of scam scenarios aimed at users.

Cybersecurity expert Ngo Minh Hieu said that fraudsters manipulate AI, creating hundreds of fraud scenarios in minutes - Photo: VU TUAN

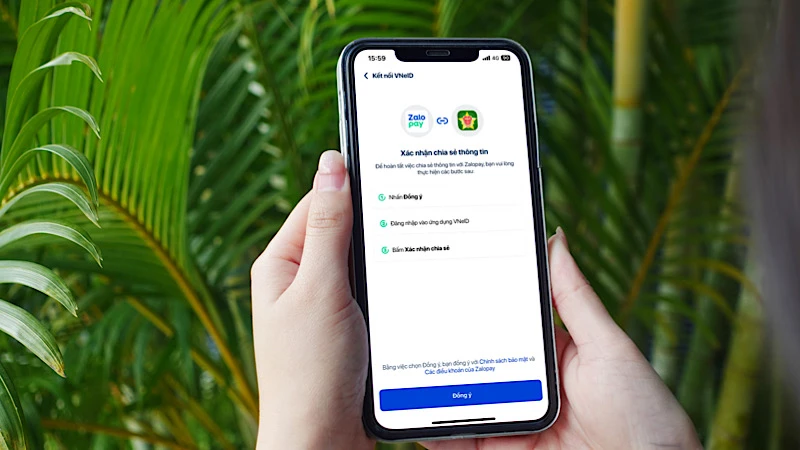

According to the social enterprise Chongluadao.vn, fraudsters have used AI to generate malware, write scam scripts, and alter audio and images using deepfakes.

According to cybersecurity expert Ngo Minh Hieu (Hieu PC), a representative of Chongluadao.vn, one dangerous tactic is to "trick AI" into loading malware. "They create fake audio or image files with embedded malware that the AI does not recognize. When the AI system processes the file, the malware activates and takes control," said Hieu PC.

For example, fraudsters use AI to fake the voices and images of relatives on FaceTime to trick people into transferring money.

Recently, the frequency of fraud has increased significantly with the support of AI tools. By manipulating AI and using it to commit fraud, scammers overcome all language and geographical barriers. The forms of fraud are becoming more sophisticated and dangerous.

Hieu PC noted that no matter what tool is used to commit fraud, cybercriminals always work from a script. His team drew this conclusion from receiving and processing hundreds of online fraud reports.

Common forms of fraud include impersonating relatives or employees of state agencies, the police, and electricity companies. More sophisticated scenarios lure victims into investment traps, task-completion schemes, or dating scams.

Cybersecurity experts say that the first step to avoid having your image faked is not to share personal images publicly on social networks. Calls and messages asking you to transfer money, click on links, or provide OTP codes are scams 99% of the time.

Hackers' Tricks for Attacking AI

According to cybersecurity experts, an "adversarial attack" is a technique hackers use to "trick" AI. It involves feeding the AI deceptive input that causes it to misinterpret the data or be exploited. As a result, the AI may inject malicious code into the system or carry out the commands given by the fraudster.

Fraudsters exploit this weakness to bypass AI, especially AI protection systems (such as antivirus software, voice recognition, or banking transaction checks).
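To make the idea concrete, here is a minimal sketch of an adversarial input, assuming a toy linear "fraud detector". The weights, bias, feature values, and function names are all made up for illustration and do not describe any real antivirus, voice recognition, or banking system. It shows how a small, deliberate change to each input value can flip the model's decision.

```python
# Toy linear detector: score = w . x + b, flagged when score > 0.
# WEIGHTS, BIAS and the feature values are hypothetical.

def score(weights, bias, features):
    """Weighted sum of the features plus a bias term."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def is_flagged(weights, bias, features):
    """The detector flags an input when its score is positive."""
    return score(weights, bias, features) > 0


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_example(weights, features, epsilon):
    """Shift every feature by at most epsilon against the sign of its weight.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so this step lowers the score by
    epsilon * sum(|w|) while changing no feature by more than epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


WEIGHTS = [0.8, -0.5, 1.2]
BIAS = -0.3

original = [0.6, 0.2, 0.4]
perturbed = adversarial_example(WEIGHTS, original, epsilon=0.25)

print(is_flagged(WEIGHTS, BIAS, original))   # True: the original input is caught
print(is_flagged(WEIGHTS, BIAS, perturbed))  # False: the altered input slips through
```

Real systems are far more complex than this linear toy, but the principle is similar: the attacker nudges the input in the direction that most reduces the model's suspicion.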

Source: https://tuoitre.vn/lua-dao-mang-lua-ca-ai-tao-kich-ban-thao-tung-tam-ly-20250228163856719.htm
