In May 2024, the world's technology giants launched new versions of their artificial intelligence (AI) models one after another, such as OpenAI with GPT-4o and Google with Gemini 1.5 Pro, adding many "super smart" features to improve the user experience. However, it is undeniable that cybercriminals are also using this technology in many online fraud scenarios.

Increasing risk

Speaking at a recent workshop, Deputy Minister of Information and Communications Pham Duc Long pointed out that cyber attacks are constantly increasing in number, sophistication and complexity. In particular, with the support of AI, the risks that users face will increase exponentially. "AI technology is being used by cybercriminals to easily create new malware, new and sophisticated phishing attacks..." - Deputy Minister Pham Duc Long warned.

According to a report by the Department of Information Security - Ministry of Information and Communications, AI-related cybersecurity risks have caused more than 1 quadrillion USD in damage worldwide, including 8,000 - 10,000 billion VND in Vietnam alone. The most common abuse today is using AI to fake voices and faces for fraud. It is predicted that by 2025 there will be about 3,000 attacks per second, 12 malware samples per second and 70 new vulnerabilities and weaknesses per day.

According to Mr. Nguyen Huu Giap, Director of BShield, a company specializing in application security, advances in AI have made it easy to create fake images and voices. Fraudsters can easily collect user data from information people post publicly on social networks, or through tricks such as online recruitment interviews and phone calls made in the name of "authorities". In a simulation conducted by BShield, fraudsters could take a face captured from a video call, place it on a CCCD (citizen identity card), and graft it onto the body of a moving person to fool the eKYC tool into recognizing it as a real person.

Fraudulent methods using deepfake technology are increasing and becoming more sophisticated. Photo: LE TINH

From the user's perspective, Mr. Nguyen Thanh Trung, an IT specialist in Ho Chi Minh City, is concerned that bad actors can take advantage of ChatGPT to create fraudulent emails whose content and writing style resemble real emails from banks or reputable organizations. These emails are likely to carry malware; if users click on it, their data will be stolen and their assets appropriated. "AI software keeps improving. It can create fake videos with faces and voices that are 95% similar to real people, with gestures that change in real time, making it very difficult for viewers to detect" - Mr. Trung said.

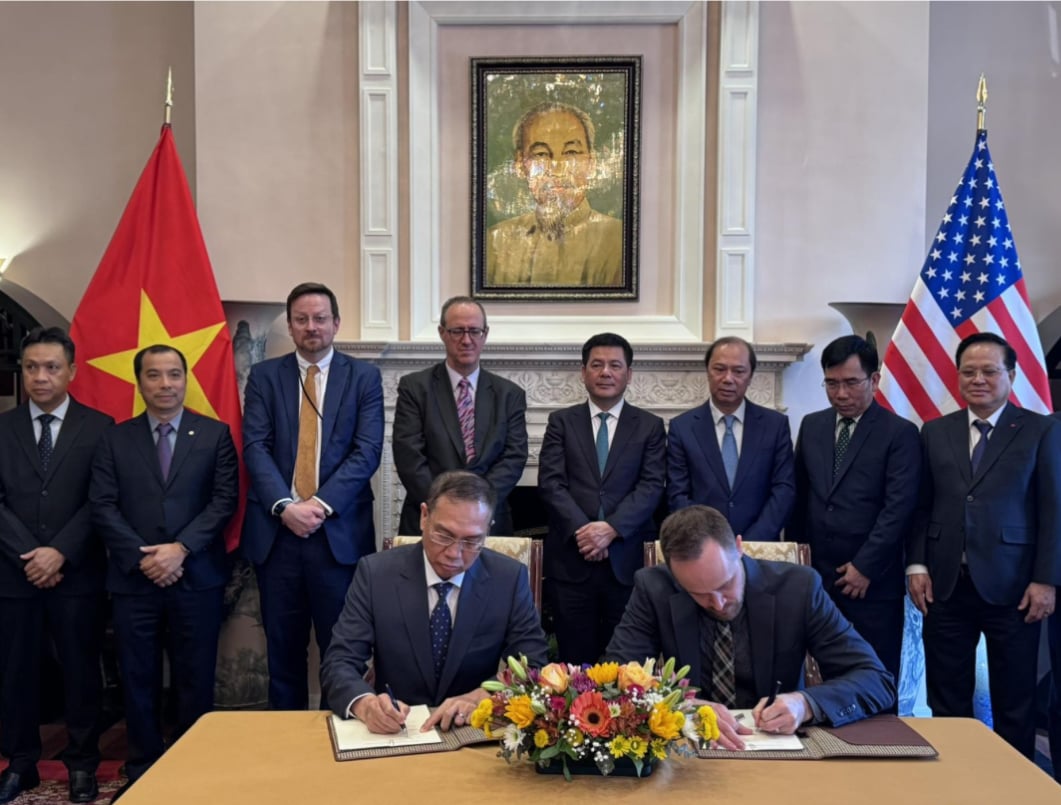

Complete the legal framework soon

To limit the risk of high-tech fraud, security expert Pham Dinh Thang noted that users need to regularly update their knowledge about AI and not click on links of unknown origin. Businesses should allocate a budget to ensure data security and system operations. Their staff also need in-depth training that exposes them to real vulnerabilities occurring in the system, improving their ability to identify different types of cyber attacks.

Mr. Ta Cong Son, Head of AI Development Department - Anti-Fraud Project, said that AI has become deeply embedded in the lives of users and the operations of businesses, agencies and units thanks to its extremely intelligent support features. Notably, AI technology is now very accessible and easy to use, which makes it easy for bad actors to exploit it for fraud. "Fraudsters also constantly change their attack methods, so users must update their information regularly to know how to prevent them" - Mr. Son recommended.

According to experts, the recently upgraded GPT-4o and Gemini 1.5 Pro models have proven that AI's intelligence has no limits. Therefore, in addition to encouraging businesses to develop AI anti-fraud tools and solutions, management agencies should promptly complete the legal framework on AI to stay ahead of the trend.

Lieutenant Colonel Nguyen Anh Tuan, Deputy Director of the National Population Data Center, said that documents regulating the ethics and responsibilities of domestic and foreign service providers in developing, producing, applying, and using AI need to be issued soon. At the same time, there should be specific regulations on technical standards for systems that use, apply, connect to, and provide AI services. "It is necessary to research and apply AI projects to combat AI risks. Because AI is created by humans and is a product of knowledge, there will be variations of 'good AI' and 'bad AI', so it is possible to use artificial intelligence itself to check the development of artificial intelligence" - Lieutenant Colonel Tuan noted.

Cybersecurity challenges

Lieutenant Colonel Nguyen Anh Tuan acknowledged that AI is posing challenges to network security. AI-supported malicious software can be embedded in document files; when users upload these files, the malicious code penetrates the system. In addition, using specialized AI technologies, hackers can simulate a system and attack its security holes. AI can also create many applications that imitate the images and names of the Ministry of Public Security's applications and websites, tricking people into downloading and installing them and providing information such as ID card numbers and login passwords. "AI will also develop its own software and hybrid malware that combines many malware lines, with more sophisticated security evasion mechanisms and source code that changes automatically and flexibly" - Lieutenant Colonel Nguyen Anh Tuan warned.

Source: https://nld.com.vn/toi-pham-mang-co-them-bi-kip-tu-ai-196240601195415035.htm
