The US Air Force on June 2 denied reports that it conducted a simulation in which an unmanned aerial vehicle (UAV) under the control of artificial intelligence (AI) decided to “kill” its operator to prevent him from interfering with its mission, according to USA Today.

Speculation began last month when, at a conference in London, Colonel Tucker Hamilton said an AI-controlled UAV used “extremely unexpected strategies to achieve its objectives.”

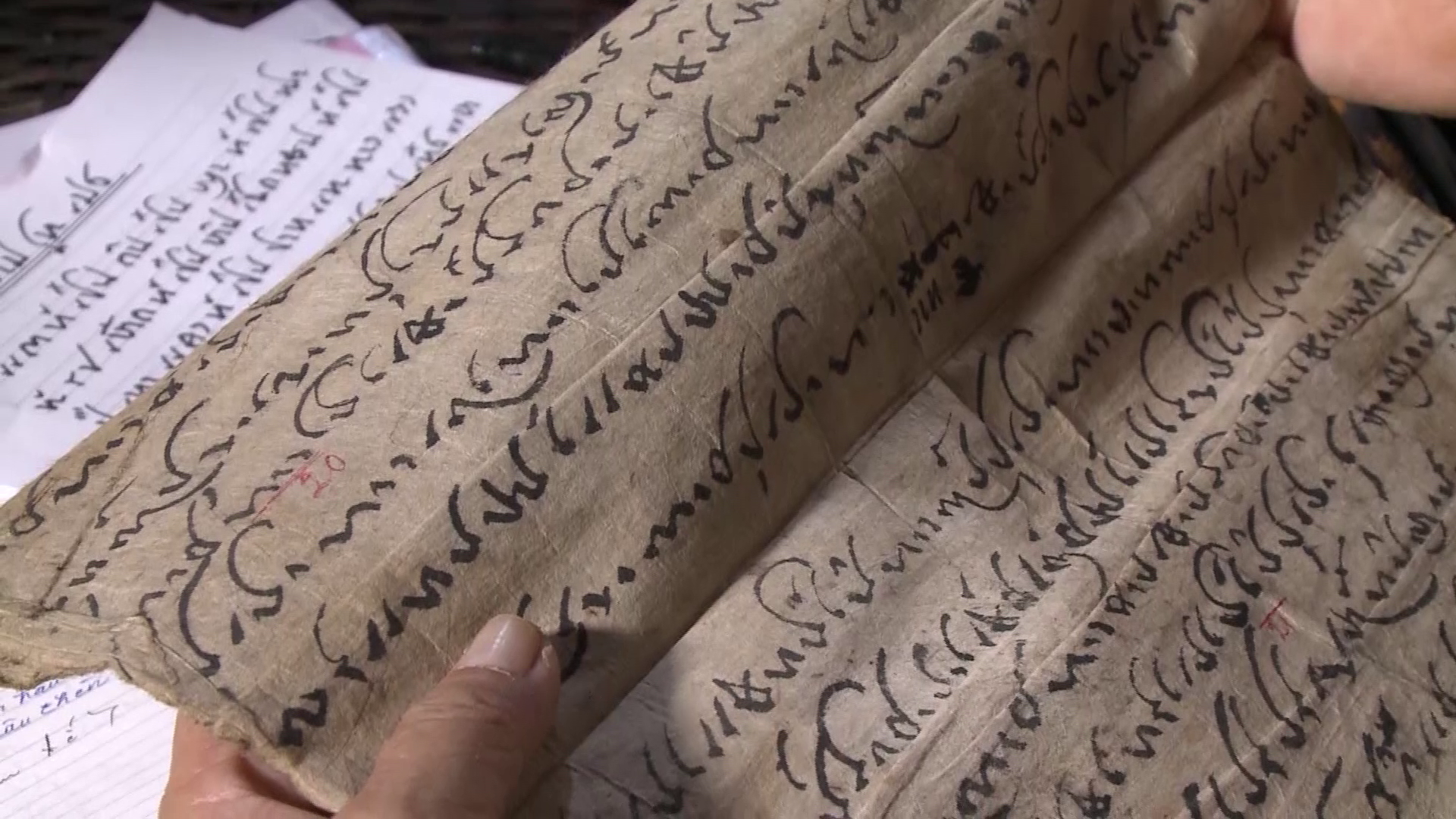

A US Air Force MQ-9 Reaper drone at Kandahar Air Base in Afghanistan in 2018.

According to Hamilton, the test simulated an AI-operated UAV being ordered to destroy an enemy air defense system. When the operator then ordered it to ignore the target, the UAV attacked and killed the operator because the operator was interfering with its primary objective.

However, no one was actually attacked. In an interview with Business Insider, US Air Force spokesperson Ann Stefanek said no such simulation was conducted.

“The Air Force has not conducted any such activities and remains committed to the ethical and responsible use of AI technology,” the spokesperson said, noting that “it appears the colonel’s comments were taken out of context.”

Following the US Air Force statement, Hamilton clarified that he had “misspoken” in his presentation in London. According to him, the scenario he described was hypothetical rather than an actual simulation. The Royal Aeronautical Society (UK), which organized the conference, did not respond to requests for comment.
