
AI has yet to replace humans in programming. Photo: John McGuire.

Leading AI models from OpenAI, Anthropic and Google have increasingly been used for programming. ChatGPT and Claude have gained larger memory and processing capacity, allowing them to analyze hundreds of lines of code, while Gemini has added Canvas, a result display aimed specifically at programmers.

In October 2024, Google CEO Sundar Pichai said that 25% of new code at the company is generated by AI. Mark Zuckerberg, CEO of Meta, has also expressed ambitions to deploy code-writing AI models widely within the company.

However, a new study from Microsoft Research, Microsoft's R&D arm, shows that AI models, including Anthropic's Claude 3.7 Sonnet and OpenAI's o3-mini, failed to resolve many of the bugs in a software development benchmark called SWE-bench Lite.

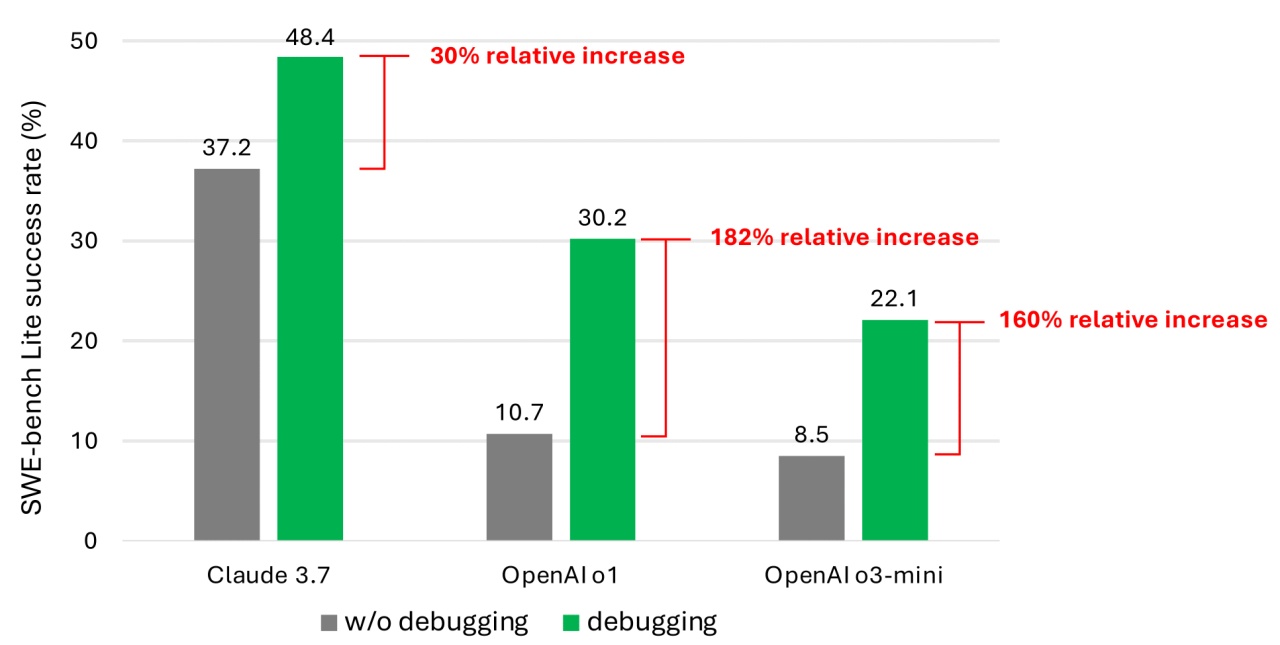

The authors tested nine different AI models, each serving as the backbone of a single prompt-based agent with access to a range of debugging tools, including the Python debugger. The agent was tasked with solving 300 software debugging tasks selected from the SWE-bench Lite dataset.


Success rate when solving programming problems from the SWE-bench Lite dataset. Photo: Microsoft.

The results showed that, even when powered by newer and more capable models, the agent rarely completed more than half of the assigned debugging tasks. Claude 3.7 Sonnet achieved the highest average success rate at 48.4%, followed by OpenAI's o1 at 30.2% and o3-mini at 22.1%.

Part of the reason for this poor performance is that some models did not understand how to use the debugging tools provided to them. According to the authors, however, the bigger problem is a lack of data.

They argue that the data used to train current models lacks examples that trace the debugging steps humans take from start to finish. In other words, AI has not yet learned enough about how humans think and act, step by step, when dealing with a real software bug.

Training or fine-tuning the models could make them better at debugging software. “However, this would require specialized training datasets,” the authors said.

Numerous studies have found security vulnerabilities and errors in AI-generated code, caused by weaknesses such as a limited grasp of programming logic. A recent evaluation of Devin, an AI programming tool, found that it completed only 3 of 20 programming tests.

AI's programming ability remains contested. Kevin Weil, OpenAI's Chief Product Officer, has previously said that AI will surpass human programmers by the end of this year.

By contrast, Bill Gates, co-founder of Microsoft, believes programming will remain a sustainable career in the future. Other leaders, including Amjad Masad (CEO of Replit), Todd McKinnon (CEO of Okta) and Arvind Krishna (CEO of IBM), have voiced support for this view.

Although its findings are not entirely new, Microsoft's study is a reminder for programmers, and their managers, to think carefully before handing full control of coding to AI.

Source: https://znews.vn/diem-yeu-chi-mang-cua-ai-post1545220.html
