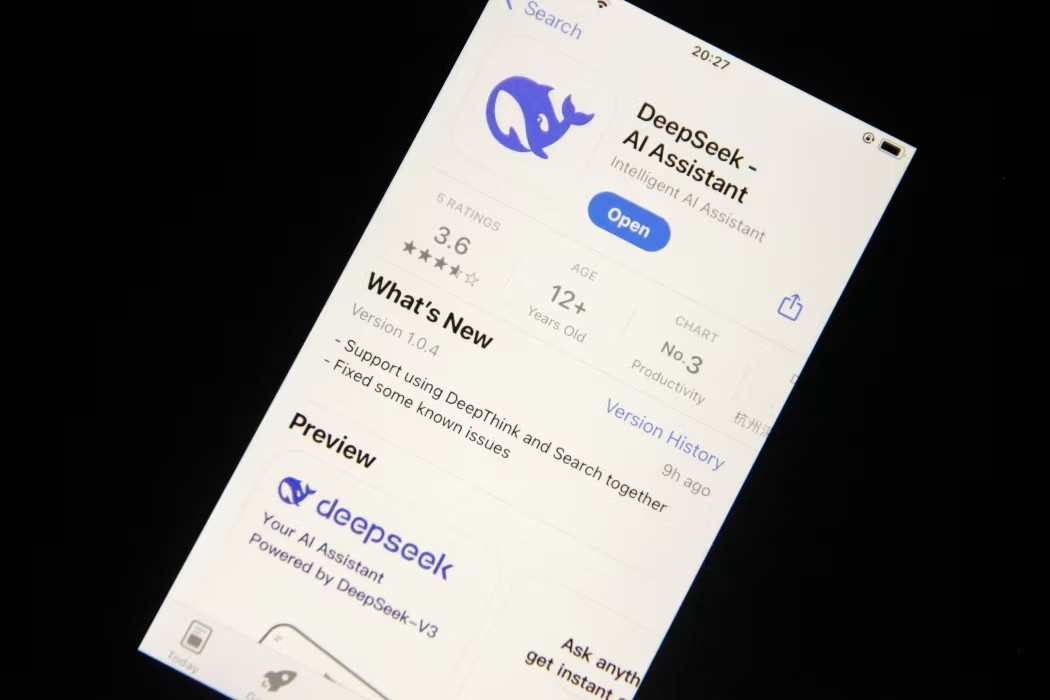

South Korea's Ministry of Industry is the latest agency to announce it is temporarily blocking employees from accessing an AI model from Chinese startup DeepSeek, citing security concerns.

Earlier, on February 5, the South Korean government asked ministries and agencies to exercise caution when using AI services, including DeepSeek and ChatGPT, in the workplace.

State-owned Korea Hydro & Nuclear Power also announced a ban on AI services, including DeepSeek, earlier this month.

The South Korean Ministry of National Defense has likewise blocked access on computers used for military purposes.

According to the Yonhap News Agency, the country's Foreign Ministry has restricted access to DeepSeek on computers connected to external networks, but it did not give details of the security measures.

South Korea is the latest country to raise concerns about the Chinese-made AI model. Australia and Taiwan (China) have also previously said that DeepSeek poses security threats.

In January 2025, Italy's data protection authority blocked access to the chatbot after the Chinese startup failed to address privacy concerns.

In Europe, the US and India, governments are also looking into the potential risks of using DeepSeek.

In South Korea, authorities plan to ask DeepSeek to explain how it handles users' personal information.

A representative of Kakao Corp, the operator of the popular Korean chat app KakaoTalk, said the company has banned employees from using DeepSeek, just one day after it announced a partnership agreement with OpenAI.

Korean tech companies are generally taking a cautious approach to generative AI. SK Hynix, a maker of AI chips, has restricted access to AI services and allows their use only when necessary.

Meanwhile, Naver, one of South Korea's major web portal operators, said it has asked employees not to use AI services that store data outside the company.

(According to Yahoo News)

Source: https://vietnamnet.vn/han-quoc-cam-deepseek-2368963.html
