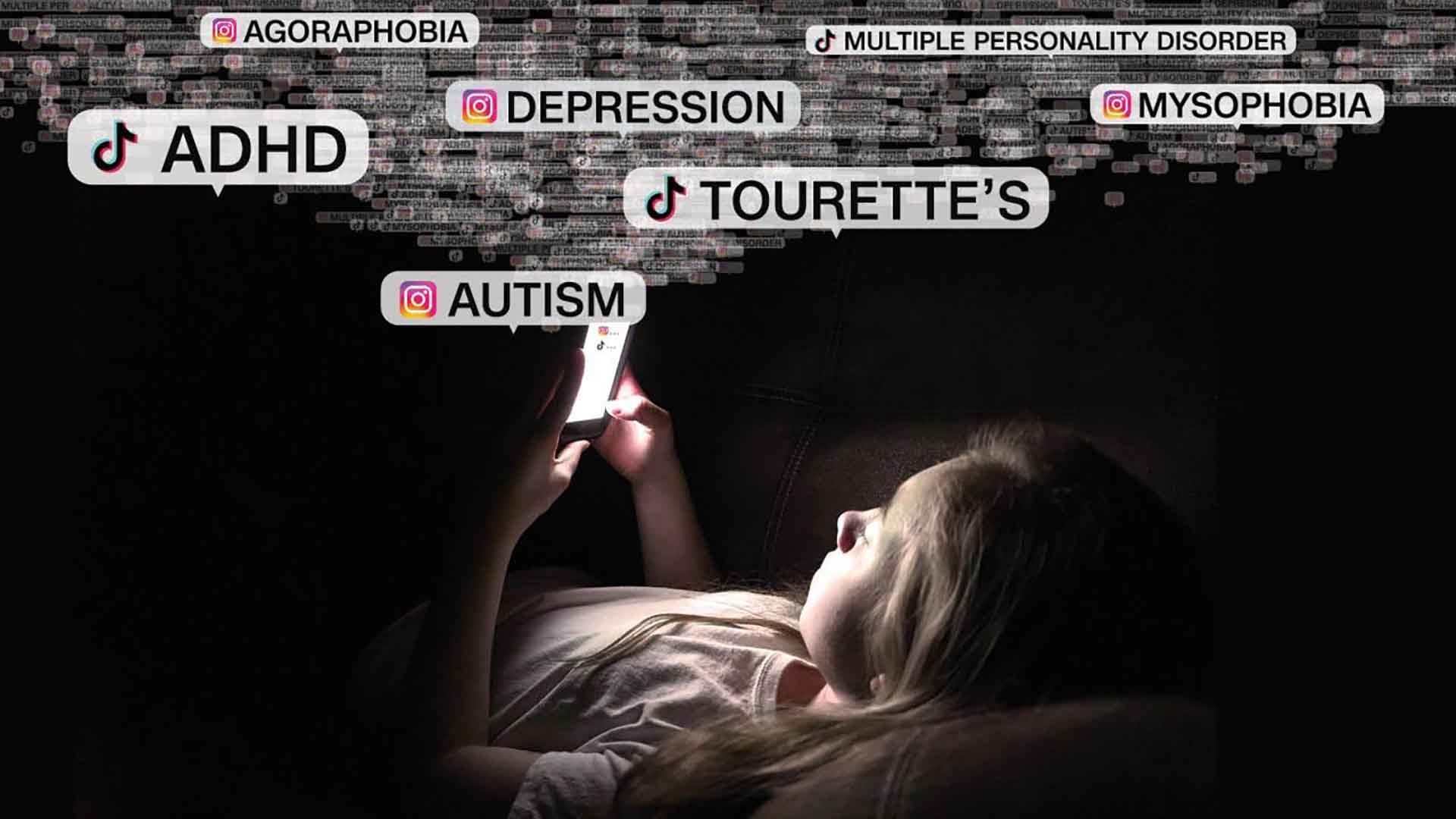

A growing number of teenagers are using social media to self-diagnose mental health conditions, including autism.

Many Western teenagers use social media to self-diagnose mental health conditions. (Illustrative image. Source: CNN)

Unlike most teenagers who browse TikTok and Instagram for entertainment, Erin Coleman's 14-year-old daughter uses social media to search for videos about mental health diagnosis.

Based on information from social media, the girl firmly believed she suffered from attention deficit hyperactivity disorder (ADHD), depression, autism, germophobia, and fear of leaving the house. Mrs. Coleman said, "Every week, my daughter came up with a different diagnosis. She thought she had the same thing as everyone else."

After she underwent mental health and medical examinations, doctors concluded that Mrs. Coleman's daughter was suffering from severe anxiety.

Mental health crisis

Social media platforms, including TikTok and Instagram, have come under intense scrutiny in recent years for their potential to lead young users to harmful content and exacerbate mental health crises among teenagers.

Even so, more and more teenagers are using social media platforms like Instagram and TikTok to find resources and support for their mental health, and to self-treat in ways they deem appropriate for themselves.

Using the internet for self-diagnosis is nothing new. With the vast amount of information available online, children can obtain the mental health information they need and feel less alone.

However, self-diagnosis and misdiagnosis can make the problem worse. Even worse, children may self-medicate for conditions they don't have. And the more they search for this kind of content, the more similar videos and posts social media algorithms show them.

Dr. Larry D. Mitnaul, a psychiatrist specializing in adolescent mental health in Wichita, Kansas, says the self-diagnoses he has encountered most often in teenagers, especially since 2021, are ADHD, autism spectrum disorder, and dissociative identity disorder (formerly known as multiple personality disorder). “The consequence is that treatment and intervention are quite complicated,” he says. This puts parents in a difficult position, because finding help isn’t always easy.

Another parent, Julie Harper (USA), said her daughter, originally outgoing and friendly, changed during the Covid-19 lockdown in 2020, when she was 16 and was diagnosed with depression. Her condition improved with medication, but her mood swings worsened and new symptoms appeared after she started spending a lot of time watching TikTok.

Experts say that teenagers often see social media users who post about mental disorders as "trusted sources," either because those users also suffer from the disorder discussed in the video or because they identify themselves as experts on the topic.

Call to action

In May, the US Surgeon General issued a warning about the “profoundly harmful” effects of social media use on children, called for more research into its impact on adolescent mental health, and urged action from policymakers and social media platforms. According to Alexandra Hamlet, a New York City psychologist, social media companies should adjust their algorithms to detect when users are viewing too much content on a particular topic. She said, “They need to have notifications reminding users to pause and reflect on their social media habits.”

In a statement, Liza Crenshaw, a spokesperson for Meta, Instagram's parent company, said the company does not have specific safeguards beyond its Community Standards, which prohibit the promotion, encouragement, or glorification of things like dieting or self-harm. Meta has created several programs, such as the Well-being Creator Collective, to guide content creators in designing positive, inspiring content that supports the physical and mental health of teenagers. Instagram has also introduced several tools that limit late-night browsing and redirect teenagers to a different topic if they have viewed the same kind of content for too long.

Strengthening control

Social media platforms now have tools to measure the harms of excessive use, especially among young people, but few measures to curb it. Nevertheless, some platforms and apps have begun to implement solutions.

For example, Snapchat, one of the most popular communication and social networking platforms among Western youth, has officially introduced a "Family Center" feature that allows parents to partially monitor their children's social media use. Through this feature, parents can see how often their children log in and whom they interact with online, though they cannot view the content of those interactions.

According to a warning issued on May 23rd by US Surgeon General Vivek Murthy, social media platforms must implement similar features because protecting minors is one of the top priorities for social media regulators in Western countries, particularly in Europe.

The development of social media is inevitable; rather than being stifled, it needs to be regulated to ensure transparent and controllable growth. With large technology companies like Google, Facebook, and TikTok exerting ever more influence while facing few accountability obligations to the community, governments have an essential role in tightening control. Beyond the responsibility of technology companies, another crucial factor in ensuring a healthy social media environment is raising the awareness of each user and strengthening the role of education.
