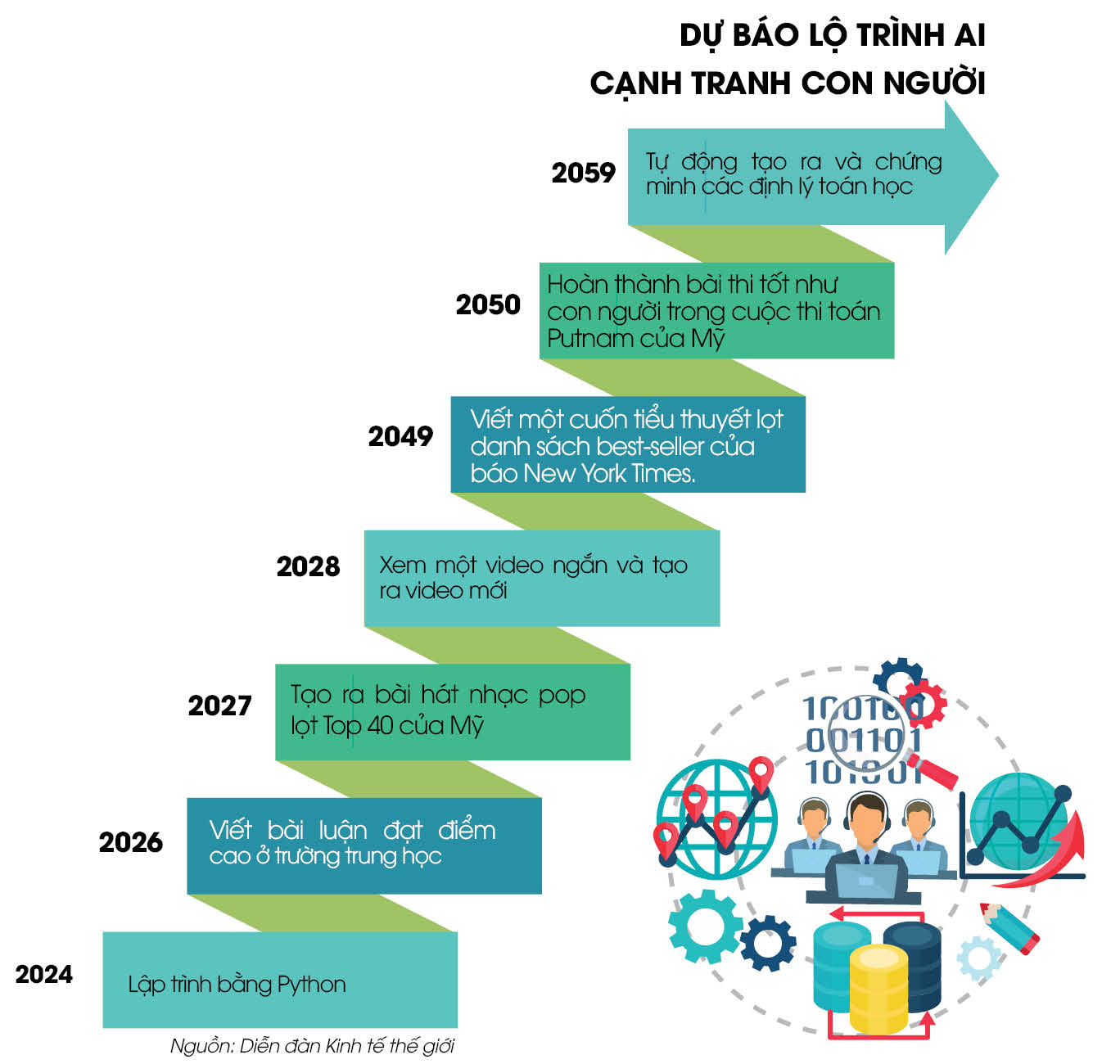

There has been much talk about the ways AI outperforms human capabilities, but where do machines still fall short? In other words, how should humans use generative AI based on a clear understanding of its strengths and weaknesses? And what rules should newsrooms set on the use of AI to ensure that the information they publish is accurate and objective?

Graphics: THANH HUYEN

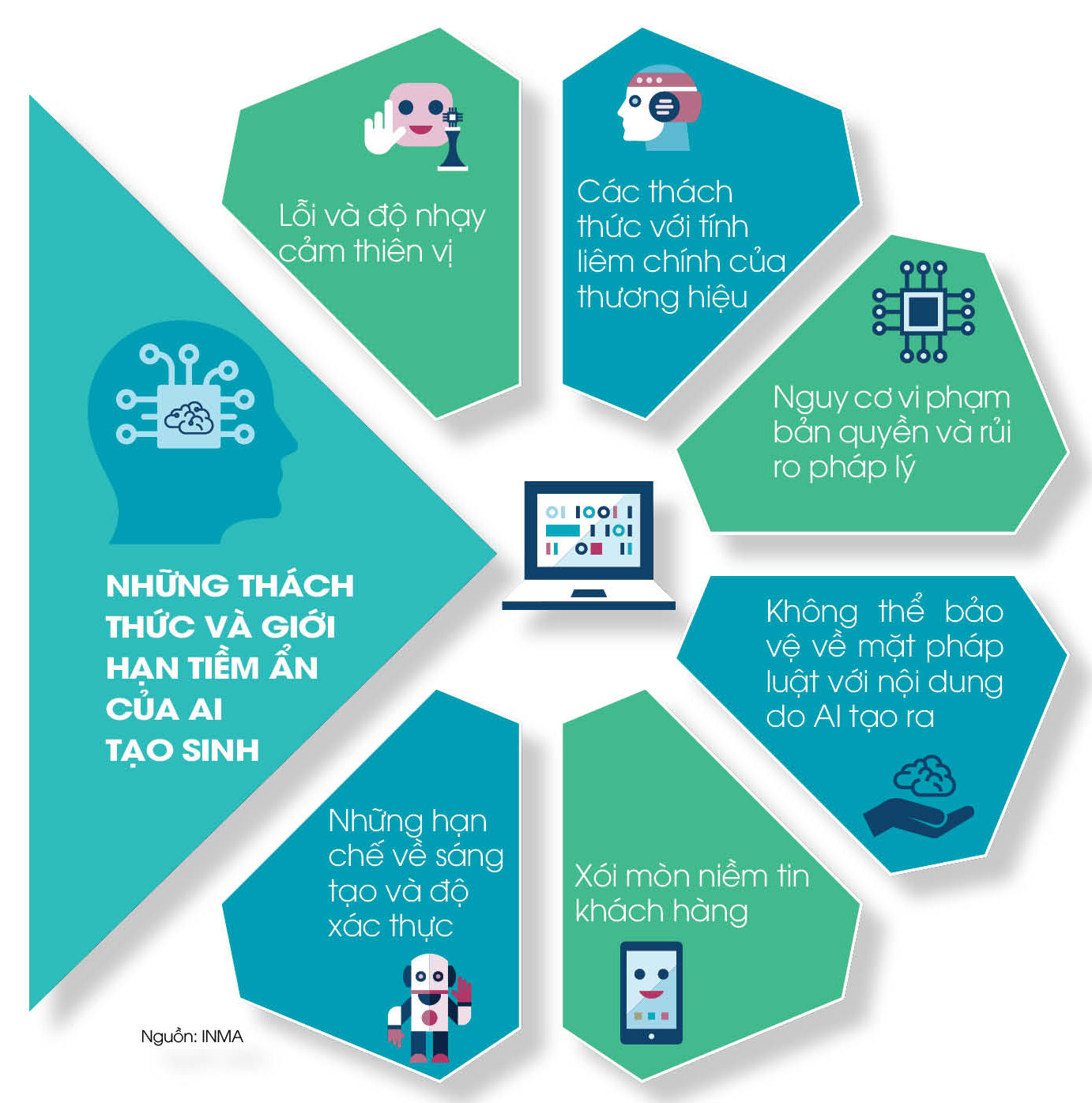

What are the weaknesses of AI?

When talking about generative AI, one might think that it has a lot of knowledge. But in fact, as many experts in the field have pointed out, what AI has is a lot of information, not knowledge. This distinction is subtle but important: for example, AI has information about an apple, but it does not “know” what an apple is. It can give a precise picture of an apple if asked, but it has never tasted an apple, never smelled or held an apple, and of course, it does not care what an apple is.

Journalism is made up of people who have all the experiences that AI lacks, which is part of why journalists are so essential to preserving the distinction between information and knowledge. That is the insight shared recently on the International News Media Association (INMA) website by Karen Silverman, CEO and founder of Cantellus Group, a consulting firm that specializes in the strategy, oversight, and governance of AI and frontier technologies.

As generative AI becomes more widespread, concerns and backlash around its use in journalism have also grown, particularly over the perception that it could serve as a substitute for original, intellectually demanding journalism. Newsrooms considering wider use of generative AI need to understand the limitations and risks of adopting these technologies from both a technical and a business perspective.

Sharing his perspective on these issues, Justin Eisenband of FTI Consulting in Washington (USA) said that newsrooms can minimize these limitations and risks with the right context and assessment. That means applying generative AI in a "precise", targeted way within newsroom workflows to improve both efficiency and the staff experience.

For example, to avoid eroding reader trust, newsrooms should clearly indicate in the byline whether an article was partially or fully produced by AI, and should publicly disclose their guidelines on the use of AI in published content. Newsrooms should also develop clear policies and workflows around AI use to avoid legal and copyright violations, and to minimize the risk of errors or bias in reporting that may stem from flaws in the data used to train the tool.

An article on the World Economic Forum website points out specific technical challenges with AI. The first is the availability of training data, which cannot always be guaranteed. The second is AI's difficulty with unstructured data: while it handles tabular information well, it still struggles to process "noisy" data, which makes up most of the data in real life.

Another major challenge is that AI is not self-aware, so it cannot explain its own output, such as why it wrote what it wrote or how it arrived at a particular result. AI also cannot verify information, so it cannot tell whether the data it is given is correct. This creates problems of authenticity: if AI is trained on disputed data, its answers are very likely to be wrong.

Graphics: THANH HUYEN

Expanding the applications of generative AI

While most of the talk about generative AI in journalism today focuses on content creation, we should also consider its applications across many other functions, both in the newsroom and in audience development and content distribution. For instance, generative AI can be used to adapt content into versions suited to different platforms.

Once an article has been written, edited, and finalized for publication, other versions can be generated automatically for other platforms: summaries for social media or newsletters, translations into other languages, and even audio versions for distribution on podcast channels.

Another area where generative AI can be useful is content processing and post-production. Editing, including color correction and audio editing, can be expensive and time-consuming. Generative AI can suggest edits and can also reduce the time it takes to apply an edit made to one frame or image across an entire video or image set.

In audience development and marketing, AI can also help by tagging content with metadata and optimizing it. While many publishers already use personalization in content marketing, advances in AI can help create even more personalized user experiences and promote content in ways that are likely to drive stronger reader engagement.

"The technological advances we are creating today put enormous pressure on the distinction between data, information, and knowledge." Karen Silverman, CEO and founder of Cantellus Group

DO DUONG
