The rapid development of artificial intelligence (AI) has raised concerns that it is developing faster than humans can understand its impact.

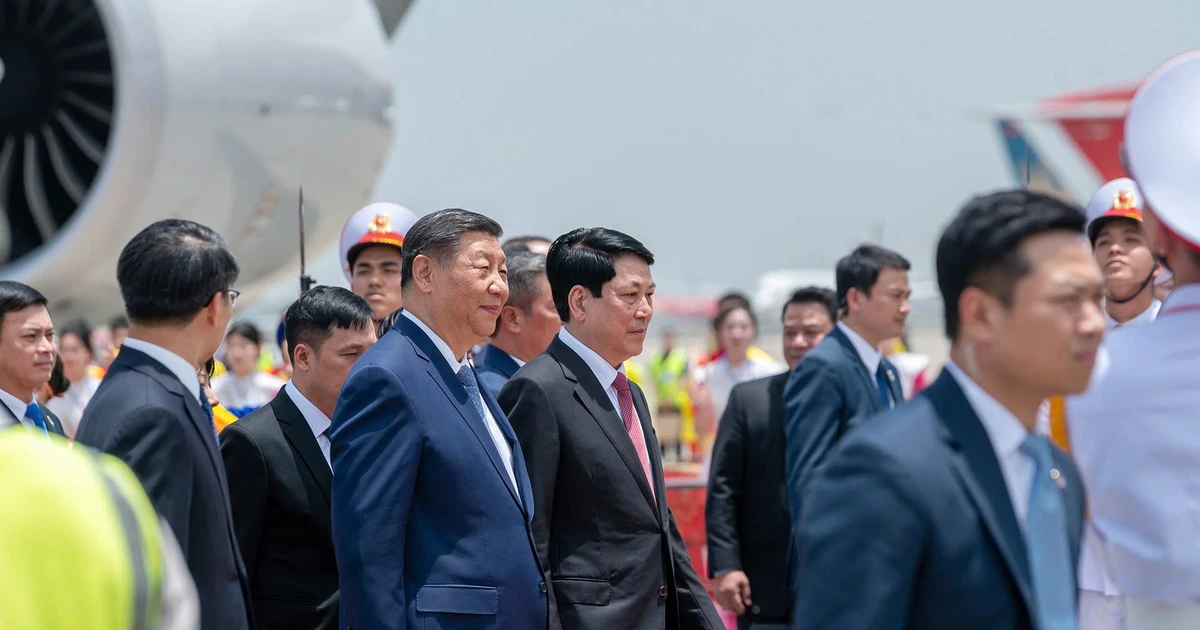

Photo: ST

The use of generative AI has increased significantly since the emergence of tools like ChatGPT. While these tools have many benefits, they can also be misused in harmful ways.

To manage this risk, the United States and several other countries have secured agreements from seven companies (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI) that commit them to safe practices in developing AI technology.

The White House announcement comes with its own jargon that may be unfamiliar to the average person, with words like “red teaming” and “watermarking.” Here are seven AI-related terms to watch out for.

Machine learning

This branch of AI aims to train machines to perform a specific task accurately by identifying patterns in data. The machine can then make predictions based on those patterns.
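To make the idea of learning a pattern and then predicting from it concrete, here is a minimal sketch in Python. The data (hours studied and test scores) and the function names `fit_line` and `predict` are invented for illustration. Real machine learning systems use far larger datasets and more sophisticated models.

```python
def fit_line(xs, ys):
    """Find the straight line that best fits the points (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how much y tends to change when x increases by one.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the learned pattern to estimate y for a new x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: hours studied and the resulting test score.
hours = [1, 2, 3, 4]
scores = [52, 54, 56, 58]

model = fit_line(hours, scores)
estimate = predict(model, 5)  # a prediction for an input the model never saw
```

The "training" step is `fit_line`, which extracts a pattern from examples. The "prediction" step is `predict`, which applies that pattern to new input.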

Deep learning

Generative AI tasks often rely on deep learning, a method that trains computers using neural networks. These are sets of algorithms designed to mimic the neurons in the human brain. They make complex connections between patterns in order to generate text, images or other content.

Because deep learning models have multiple layers of neurons, they can learn more complex patterns than traditional machine learning methods.

Large language model

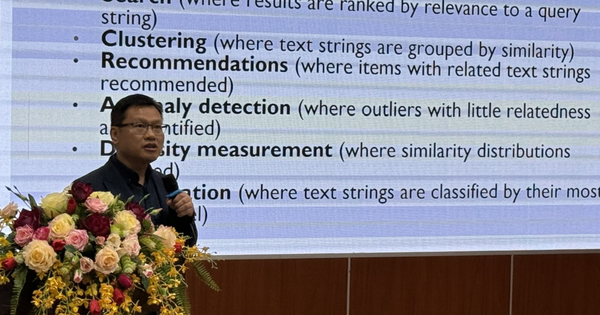

A large language model, or LLM, is trained on massive amounts of data and is intended to model language or predict the next word in a sequence. Large language models, such as ChatGPT and Google Bard, can be used for tasks including summarization, translation, and conversation.
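The idea of predicting the next word can be illustrated with a toy example. The sketch below counts which word most often follows each word in a tiny, made-up sentence. It is not how ChatGPT or Google Bard work internally (those rely on large neural networks), and the names `train_bigram` and `predict_next` are hypothetical, but the goal of guessing the next word from what came before is the same.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text, standing in for the massive datasets real models use.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

next_word = predict_next(model, "the")  # "cat" follows "the" most often here
```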

Algorithm

An algorithm is a set of instructions or rules that allows machines to make predictions, solve problems, or complete tasks. Algorithms can provide shopping recommendations and help with fraud detection and customer service chat functions.

Bias

Because AI is trained on large datasets, it can absorb harmful information contained in that data, such as hate speech. Racism and sexism can also appear in the datasets used to train AI, leading to misleading content.

AI companies have agreed to research further how to avoid harmful bias and discrimination in AI systems.

Red teaming

One of the commitments companies have made to the White House is to conduct internal and external “red teaming” of AI models and systems.

“Red teaming” involves testing a model to uncover potential vulnerabilities. The term comes from a military practice in which a team simulates an attacker’s actions in order to develop strategies against them.

This method is widely used to test for security vulnerabilities in systems such as cloud computing platforms from companies like Microsoft and Google.

Watermarking

A watermark is a way to tell whether audio or visual content was created by AI. The information recorded for verification can include who created the content, as well as how and when it was created or edited.

Microsoft, for example, has pledged to watermark images created by its AI tools. Companies have also pledged to the White House to “watermark” images or record their origins… to identify them as AI-generated.

Watermarks are also commonly used to track intellectual property violations. Watermarks for AI-generated images can appear as imperceptible noise, such as a slight change every seventh pixel.
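As a rough illustration of "a slight change every seventh pixel," the sketch below treats an image as a simple list of brightness values from 0 to 255 and nudges every seventh value by at most one unit. This is a toy scheme invented for this example, with hypothetical names `embed` and `looks_marked`. It is not the method used by Microsoft or any other company, and real watermarks are designed to survive cropping, resizing and compression.

```python
STEP = 7  # mark every seventh pixel, as in the example in the text


def embed(pixels):
    """Return a copy of the image with the lowest bit of every 7th pixel set."""
    marked = list(pixels)
    for i in range(0, len(marked), STEP):
        marked[i] |= 1  # changes brightness by at most 1 out of 255
    return marked


def looks_marked(pixels):
    """Check whether every 7th pixel carries the mark."""
    sampled = pixels[::STEP]
    return bool(sampled) and all(p & 1 for p in sampled)


# A made-up 15-pixel grayscale "image" with even brightness values.
original = list(range(100, 130, 2))
marked = embed(original)

# The change is too small to see, but a detector can find it.
largest_change = max(abs(a - b) for a, b in zip(original, marked))
```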

However, watermarking AI-generated text can be more complex and may involve tweaking word patterns so that the text can be identified as AI-generated content.

Hoang Ton (according to Poynter)
