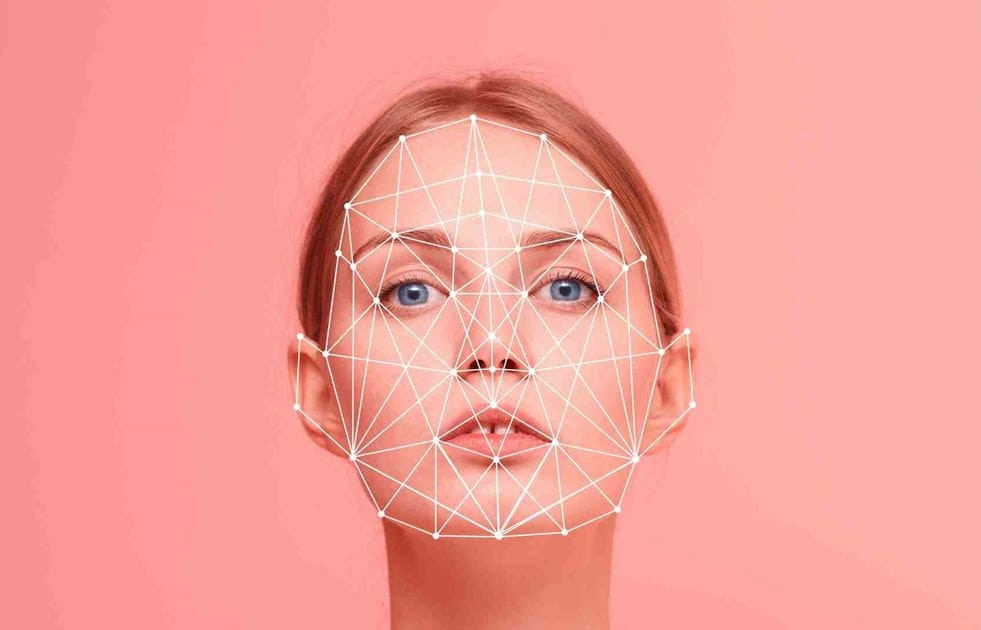

Deepfake is a term formed by combining 'deep learning' and 'fake'. Simply put, it is technology for simulating and creating fake audio, images, or even video.

With the explosive development of artificial intelligence (AI), deepfakes have become increasingly common, fueling waves of misinformation in the media. Authenticating the origin of images and videos has therefore become an urgent issue for the world's leading camera companies.

Sony, Canon and Nikon are expected to launch mirrorless cameras (or DSLR cameras) that embed digital signatures directly in the images they capture. Implementing digital signatures in the camera is an important measure because it creates evidence of an image's origin and integrity.

These digital signatures will include information such as the date, time, location, and photographer, and are designed to be tamper-resistant. This is especially important for photojournalists and other professionals whose work requires authentication.
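To illustrate how a signature can bind capture metadata to an image so that later changes are detectable, here is a minimal sketch. It is not the camera makers' actual scheme: it uses a keyed hash (HMAC-SHA256) from the Python standard library as a stand-in for the private-key signature a real camera would presumably use, and the key, function names and metadata fields are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical secret held by the camera. This is an assumption for the demo:
# a real camera would likely sign with a private key (asymmetric signature),
# not a shared secret.
CAMERA_KEY = b"demo-key-not-for-real-use"


def sign_capture(image_bytes: bytes, metadata: dict, key: bytes = CAMERA_KEY) -> str:
    """Return a hex tag that binds the image bytes to their capture metadata."""
    # Canonical JSON so the same metadata always serializes to the same bytes.
    meta_blob = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    # Fixed-length image digest followed by the metadata bytes.
    image_digest = hashlib.sha256(image_bytes).digest()
    return hmac.new(key, image_digest + meta_blob, hashlib.sha256).hexdigest()


def verify_capture(image_bytes: bytes, metadata: dict, tag: str, key: bytes = CAMERA_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_capture(image_bytes, metadata, key)
    return hmac.compare_digest(expected, tag)


# Placeholder bytes standing in for a real image file.
image = b"\x89placeholder-image-bytes"
metadata = {
    "date": "2024-01-15",
    "time": "09:30:00",
    "location": "Tokyo",
    "photographer": "A. Example",
}
tag = sign_capture(image, metadata)

print(verify_capture(image, metadata, tag))          # True
print(verify_capture(image + b"!", metadata, tag))   # False: image was altered
```

Changing either the image bytes or any metadata field produces a different tag, so verification fails. With an asymmetric signature, anyone could run the check using only the camera's public key, which is what a public verification service would need.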

The three camera giants have agreed on a global standard for digital signatures that is compatible with the online verification tool Verify. Launched by a coalition of global news organizations, technology companies and camera manufacturers, the tool lets anyone check an image's authenticity for free. If an image was created or modified using AI, Verify flags it as 'No content verification'.

The importance of anti-deepfake technologies stems from the rapid rise of deepfakes of famous figures such as former US President Donald Trump and Japanese Prime Minister Fumio Kishida.

Additionally, researchers from Tsinghua University in China have developed a new generative AI model capable of generating about 700,000 images per day.

In addition to camera makers, other tech companies are joining the fight against deepfakes. Google has released a tool to digitally watermark AI-generated images, while Intel has developed technology that analyzes skin tone changes in photos to help determine their authenticity. Hitachi is also working on technology to prevent online identity fraud.

The new technology is expected to be available in early 2024. Sony plans to promote the technology to the media and conducted field trials in October 2023. Canon is collaborating with Thomson Reuters and the Starling Lab for Data Integrity (a research institute founded by Stanford University and the University of Southern California) to further refine the technology.

Camera makers hope the new technology will help restore public trust in images, which in turn shape our perception of the world.

(according to OL)
