During a US Senate hearing on Wednesday (January 31), a senator accused Facebook founder Mark Zuckerberg of unintentionally creating a “killer product.” Zuckerberg later apologized to parents whose children were affected by the company’s online platform.

“I’m sorry for everything you’ve been through,” Zuckerberg told parents at the hearing, some of whom held up photos of their children. “No one should ever have to go through what your family has endured.”

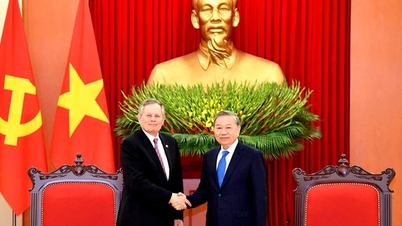

Mark Zuckerberg at a US Senate hearing on January 31, 2024. Photo: DW

The session, titled "Big Tech and the Online Child Sexual Exploitation Crisis," was convened by the US Senate Judiciary Committee, which called in tech executives including Meta CEO Mark Zuckerberg, TikTok CEO Shou Zi Chew, Snapchat co-founder Evan Spiegel, Discord CEO Jason Citron, and X CEO Linda Yaccarino to testify.

In his opening remarks, Senate Judiciary Committee Chairman Dick Durbin said technology companies must be held accountable for many of the dangers children face online.

He said the companies' design choices, their failure to invest in trust and safety, and their relentless pursuit of users and profit at the expense of basic safety have put children at risk.

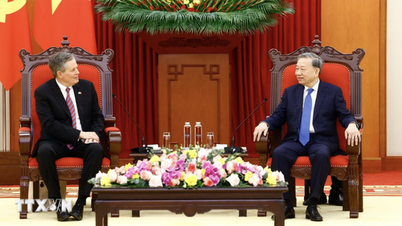

Meanwhile, Senator Lindsey Graham said: "Mr. Zuckerberg, I know you don't mean it like that, but you have blood on your hands. You have a product that is killing people."

Senator Lindsey told CEO Zuckerberg that Meta was creating a "killer product." Photo: UPI Photo

Zuckerberg told lawmakers that keeping children safe online has been a challenge since the internet began. "As criminals evolve their tactics, we have to evolve our defenses," the Meta CEO said.

The tech billionaire added that, on balance, research shows social media does not harm young people's mental health.

"As a father of three young children myself, I know that the issues we are discussing today are horrific and every parent's nightmare," said TikTok CEO Shou Zi Chew.

He revealed plans to invest more than $2 billion in trust and safety on his social media platform. "This year alone, we have 40,000 safety experts working on this," Chew said.

Meta also said it has 40,000 employees working on online safety and has invested $20 billion since 2016 to make its platforms safer.

Much of the session focused on Meta, the owner of Facebook and Instagram, which said it would block direct messages from strangers to teenagers.

Meta has also tightened content restrictions for teens on Instagram and Facebook, making it harder for them to access posts discussing suicide, self-harm, or eating disorders.

Hoai Phuong (according to AP, AFP, DW)
