The turning point letter

Reuters on November 23 quoted exclusive sources as saying that four days before CEO Sam Altman was dismissed, several OpenAI researchers sent a letter to the company's board of directors warning of a powerful artificial intelligence (AI) discovery that they said could threaten humanity. The letter had not been reported before, and it and the AI algorithm it described were key developments before the board fired Mr. Altman, OpenAI's co-founder, on November 17. He returned as CEO on November 21 (US time) after two rounds of negotiations with OpenAI. According to the sources, the letter was one of several factors behind the board's decision to fire Mr. Altman, although in its official announcement the company said only that he "was not consistently candid in his communications with the board".

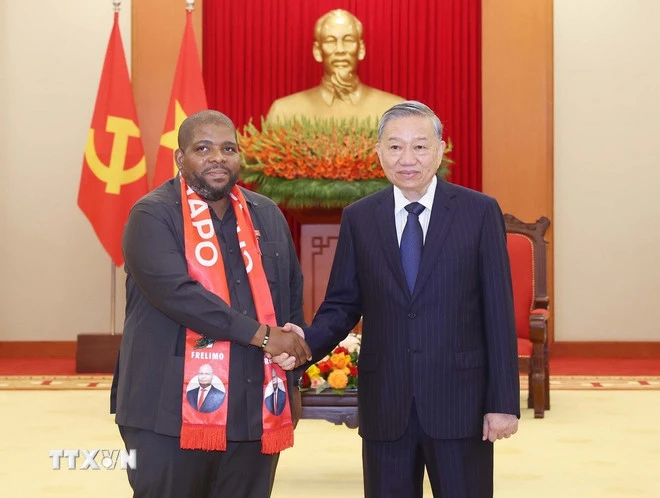

Mr. Altman (right) and leaders of technology companies take part in a discussion at the APEC conference in San Francisco (USA) on November 16.

One of OpenAI’s longtime executives, Mira Murati, mentioned a project called Q* to employees on November 22 and said the company’s board had received a letter before Altman was fired, according to the sources. One of the sources said OpenAI has made progress on Q*, which could be a breakthrough in the quest for superintelligence, also known as artificial general intelligence (AGI).

Although the model can so far only do elementary school-level math, its success on such problems makes researchers optimistic about Q*’s future. Researchers see math as a frontier in the development of generative AI. Generative AI is already good at writing and language translation, where answers to the same question can vary widely. Mastering math, where there is only one right answer, would imply that AI can reason more like humans do. Researchers believe this ability could be applied to new scientific research.

Potential danger?

In their letter to OpenAI’s board, the researchers outlined the potential power and dangers of AI, according to the sources. Computer scientists have long discussed the dangers posed by superintelligent machines, for instance whether they might decide that destroying humanity is in their own interest. Against that backdrop, Altman has led efforts to turn ChatGPT into one of the fastest-growing software applications in history and to attract the investment and computing resources needed to move closer to AGI. In addition to announcing a slew of new tools at an event this month, Altman told world leaders in San Francisco last week that he believed AGI was within reach. A day later, he was fired by OpenAI’s board. OpenAI and Altman did not immediately respond to requests for comment on the letter.

Concerned about the potential risks posed by AI, many Western governments and tech companies have agreed on a new safety testing regime. However, according to AFP, UN Secretary-General Antonio Guterres said the world was still "playing catch-up" in efforts to regulate AI, which risks having long-term negative consequences for everything from jobs to culture.

OpenAI, Microsoft sued over copyright

A group of non-fiction authors has filed a lawsuit against OpenAI and Microsoft, accusing them of copying the authors' work without consent to train ChatGPT, The Hill reported on November 23. In the lawsuit, filed in federal court in New York, the lead plaintiff, Julian Sancton, said he and other authors have received no compensation for the works copied for AI training, while OpenAI and Microsoft have profited from commercializing that work, earning billions of dollars in revenue from AI products. According to Reuters, an OpenAI spokesperson declined to comment on the lawsuit, while a Microsoft representative did not immediately respond to a request for comment.
