The tech giant is under increasing pressure in the US and Europe over allegations that its apps are addictive and cause mental health problems among young people.

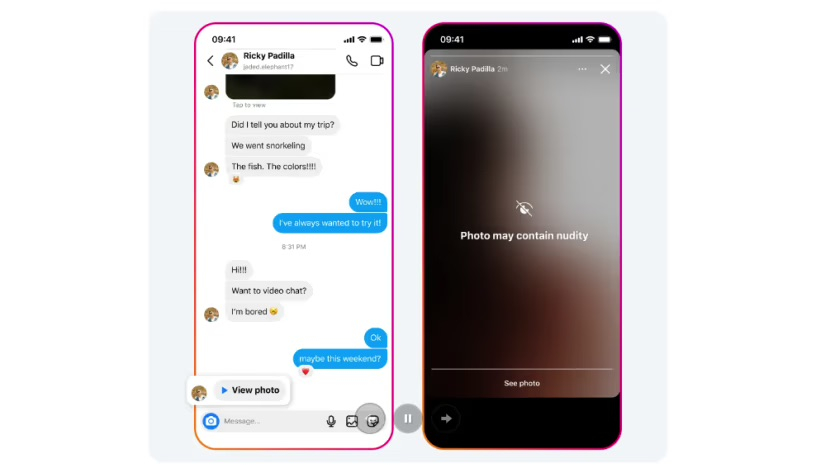

When someone receives an Instagram message containing a nude photo, the image will automatically be blurred behind a warning screen. Photo: Meta

Meta said Instagram's nudity protection feature for direct messages will use on-device machine learning to analyze whether images sent through the service contain nudity.

The feature will be turned on by default for users under 18, and Meta will show adults a notification encouraging them to enable it.

“Because the images are analyzed on the device itself, nudity protection will also work in end-to-end encrypted chats, where Meta will not have access to these images – unless someone chooses to report them to us,” the company said.

Unlike Meta's Messenger and WhatsApp apps, Instagram direct messages are not end-to-end encrypted, but the company says it plans to roll out encryption on the photo-sharing service.

Meta also said it is developing technology to help identify accounts that may be involved in sextortion scams, and it is testing new notifications for users who may have interacted with those accounts.

In January, Meta said it would hide more content from teens on Facebook and Instagram, adding that this would make it harder for young people to find sensitive content related to suicide, self-harm and eating disorders.

Thirty-three US states, including California and New York, sued Meta in October 2023, alleging that the company repeatedly lied to the public about the dangers of its platforms. The European Commission has also ordered Meta to protect children from illegal and harmful content.

Mai Anh (according to CNA)

Source

![[Photo] Prime Minister Pham Minh Chinh and Prime Minister of the Kingdom of Thailand Paetongtarn Shinawatra attend the Vietnam-Thailand Business Forum 2025](https://vphoto.vietnam.vn/thumb/1200x675/vietnam/resource/IMAGE/2025/5/16/1cdfce54d25c48a68ae6fb9204f2171a)

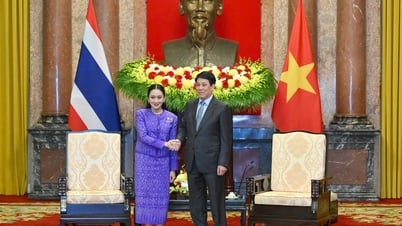

![[Photo] President Luong Cuong receives Prime Minister of the Kingdom of Thailand Paetongtarn Shinawatra](https://vphoto.vietnam.vn/thumb/1200x675/vietnam/resource/IMAGE/2025/5/16/52c73b27198a4e12bd6a903d1c218846)

Comment (0)