The GPU is the brain of the AI computer

Simply put, the graphics processing unit (GPU) acts as the brain of the AI computer.

As you may know, the central processing unit (CPU) is the brain of a conventional computer. A GPU is a specialized processor designed to perform a very large number of calculations in parallel, which suits the arithmetic behind AI training. The fastest way to train a model is to have groups of GPUs work on the same problem. Even so, training an AI model can take weeks or even months. Once trained, the model is deployed on a front-end computer system where users can ask it questions, a process called inference.

An AI computer containing multiple GPUs

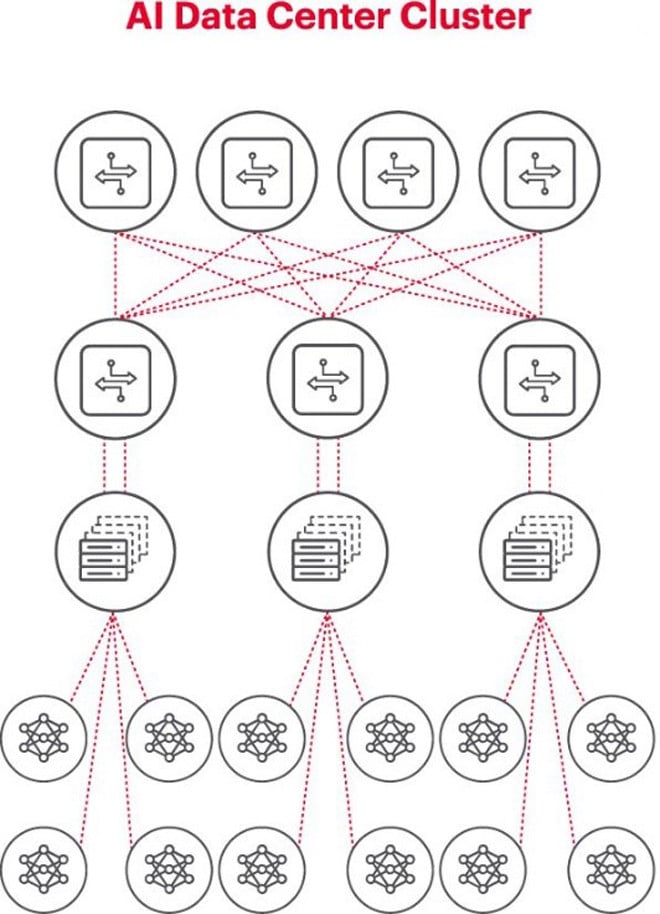

The architecture best suited to AI problems is a cluster of GPUs in a rack, connected to a switch at the top of the rack. Multiple GPU racks can then be connected through a hierarchy of network switches. As the problem becomes more complex, the GPU requirements increase, and some projects may need to deploy clusters of thousands of GPUs.

Each AI cluster is a small network

When building an AI cluster, it is necessary to set up a small computer network that connects the GPUs and lets them work together and share data efficiently.

The figure above illustrates an AI cluster in which the circles at the bottom represent the workloads running on GPUs. The GPUs connect to the top-of-rack (ToR) switches. The ToR switches in turn connect to the network backbone switches shown at the top of the diagram, illustrating the clear network hierarchy required when many GPUs are involved.
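The hierarchy described above can be sketched as a small model. This is only an illustration under stated assumptions: the class name `Cluster`, its fields, and the example link speeds are hypothetical and do not come from the article. It shows two things the hierarchy implies: traffic between GPUs in different racks crosses more switches than traffic inside a rack, and a ToR switch can be oversubscribed when its uplinks carry less capacity than its GPU-facing links.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical two-tier cluster: GPUs -> ToR switches -> backbone switches."""
    racks: int
    gpus_per_rack: int
    gpu_link_gbps: float    # assumed speed of each GPU-to-ToR link
    uplinks_per_tor: int    # assumed number of ToR-to-backbone links
    uplink_gbps: float      # assumed speed of each uplink

    def rack_of(self, gpu: int) -> int:
        """Return the rack index of a GPU, numbering GPUs rack by rack."""
        if not 0 <= gpu < self.racks * self.gpus_per_rack:
            raise ValueError(f"GPU index {gpu} is outside the cluster")
        return gpu // self.gpus_per_rack

    def switches_on_path(self, a: int, b: int) -> int:
        """Count the switches a packet crosses between GPU a and GPU b."""
        if a == b:
            return 0
        if self.rack_of(a) == self.rack_of(b):
            return 1    # GPU -> ToR -> GPU
        return 3        # GPU -> ToR -> backbone -> ToR -> GPU

    def tor_oversubscription(self) -> float:
        """Ratio of GPU-facing capacity to uplink capacity on one ToR switch."""
        downlink = self.gpus_per_rack * self.gpu_link_gbps
        uplink = self.uplinks_per_tor * self.uplink_gbps
        return downlink / uplink


cluster = Cluster(racks=4, gpus_per_rack=8, gpu_link_gbps=400.0,
                  uplinks_per_tor=4, uplink_gbps=800.0)
print(cluster.switches_on_path(0, 7))    # same rack
print(cluster.switches_on_path(0, 8))    # different racks
print(cluster.tor_oversubscription())
```

A ratio above 1.0 would mean the rack can generate more traffic than its uplinks can carry, which is one place congestion can appear.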

Networks are a bottleneck in AI deployment

Last fall, at the Open Compute Project (OCP) Global Summit, where delegates were working to build the next generation of AI infrastructure, delegate Loi Nguyen from Marvell Technology made a key point: “networking is the new bottleneck.”

Technically, high packet latency or packet loss caused by network congestion forces packets to be re-sent, which significantly increases job completion time (JCT). While GPUs wait on the network they sit idle, so inefficient AI systems can leave millions or tens of millions of dollars' worth of GPUs underused, costing businesses both revenue and time to market.
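The effect of re-sent packets on JCT can be illustrated with a rough back-of-the-envelope model. It is a sketch, not a measurement: it assumes each training step is synchronous (computation followed by a data exchange that ends only when the slowest flow finishes), that computation and communication do not overlap, and that every retransmission timeout costs a fixed delay. The function names and all numbers are hypothetical.

```python
def flow_time_s(size_bytes: float, link_gbps: float,
                timeouts: int = 0, rto_s: float = 0.004) -> float:
    """Transfer time of one flow plus time lost to retransmission timeouts."""
    transfer_s = size_bytes * 8 / (link_gbps * 1e9)
    return transfer_s + timeouts * rto_s


def job_completion_time_s(steps: int, compute_s: float,
                          flow_times_s: list[float]) -> float:
    """JCT when every step waits for its slowest flow (assumed, no overlap)."""
    return steps * (compute_s + max(flow_times_s))


# Eight flows of 1 GB each over assumed 400 Gbit/s links.
clean = [flow_time_s(1e9, 400) for _ in range(8)]
# Same exchange, but one flow suffers 10 retransmission timeouts.
congested = clean[:-1] + [flow_time_s(1e9, 400, timeouts=10)]

jct_clean = job_completion_time_s(1000, 0.08, clean)
jct_congested = job_completion_time_s(1000, 0.08, congested)
slowdown = jct_congested / jct_clean

print(f"clean JCT:     {jct_clean:.1f} s")
print(f"congested JCT: {jct_congested:.1f} s")
print(f"slowdown:      {slowdown:.2f}x")
```

In this model a single congested flow out of eight slows the whole job, because every GPU waits for the slowest transfer in each step.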

Measurement is key to operating AI networks successfully

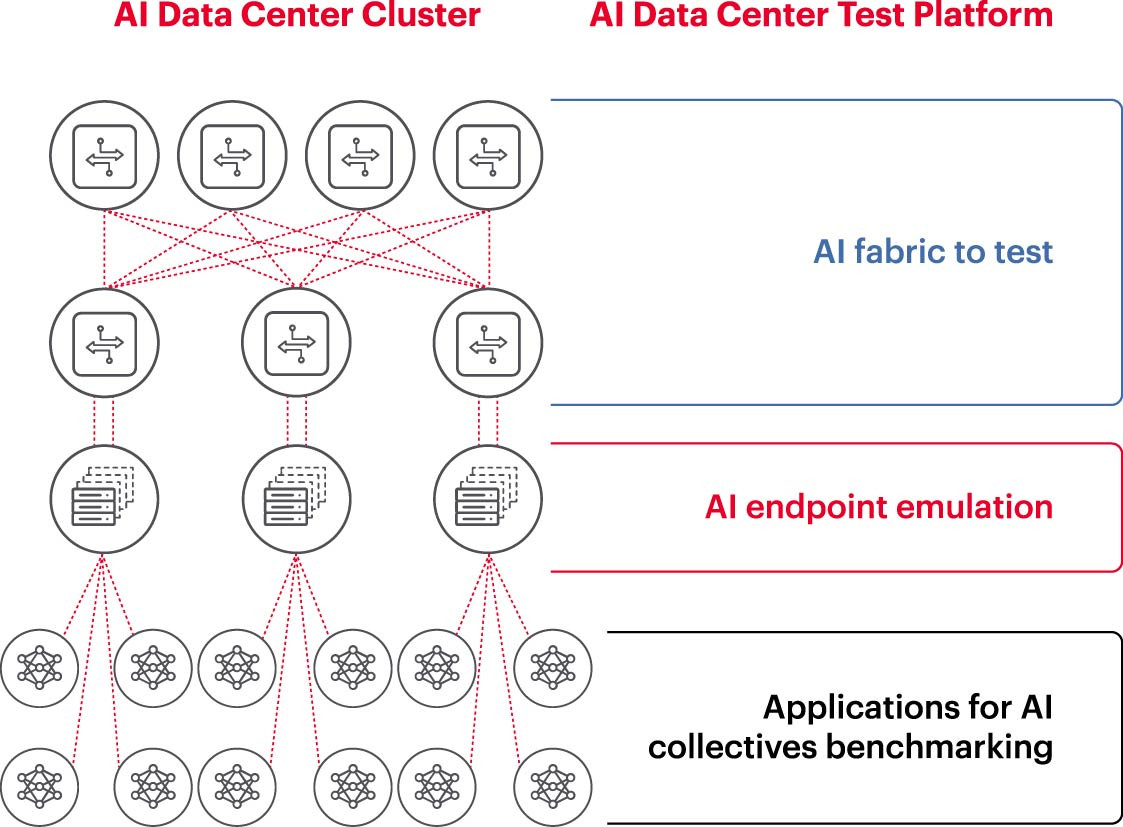

To run an AI cluster effectively, its GPUs must be fully utilized so that training time is shortened and the trained model goes into use sooner, maximizing the return on investment. It is therefore necessary to test and evaluate the performance of the AI cluster (Figure 2). This task is not easy, however, because the system architecture involves many settings and relationships between GPUs and network structures that must complement each other to solve the problem.

This creates many challenges in measuring AI networks:

- Difficulty in reproducing entire production networks in the lab because of constraints on cost, equipment, space, power, and cooling, and a shortage of skilled AI network engineers.

- Measuring on the production system reduces the processing capacity available to the production workloads themselves.

- Difficulty in accurately reproducing problems because of differences in scale and scope between the lab and production.

- The complexity of how the GPUs are interconnected and communicate as a group.

To address these challenges, businesses can test a subset of the recommended setups in a lab environment and benchmark key metrics such as job completion time (JCT) and the bandwidth the AI workload can achieve, then compare these with switching platform utilization and cache utilization. This benchmarking helps find the right balance between GPU/processing workload and network design/setup. Once satisfied with the results, the computer architects and network engineers can take these setups to production and measure the new results.
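As a hedged illustration of such a benchmark, the sketch below turns one timed collective operation into bandwidth figures that can be compared with link capacity. It assumes the operation is an all-reduce and follows the convention used by common collective benchmarks such as nccl-tests, where bus bandwidth is the algorithm bandwidth scaled by 2(n-1)/n for n GPUs. The function names, message size, timing, and link speed are hypothetical, and results are expressed in Gbit/s to match link speeds.

```python
def allreduce_bandwidth_gbps(message_bytes: float, elapsed_s: float,
                             num_gpus: int) -> tuple[float, float]:
    """Return (algorithm bandwidth, bus bandwidth) in Gbit/s for an all-reduce."""
    if num_gpus < 2:
        raise ValueError("an all-reduce needs at least two GPUs")
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    # Algorithm bandwidth: message size divided by the measured time.
    algbw = message_bytes * 8 / elapsed_s / 1e9
    # Bus bandwidth: scaled so it can be compared with per-link capacity.
    busbw = algbw * 2 * (num_gpus - 1) / num_gpus
    return algbw, busbw


def link_utilization(busbw_gbps: float, link_gbps: float) -> float:
    """Fraction of the assumed per-GPU link speed that the collective achieved."""
    return busbw_gbps / link_gbps


# Hypothetical measurement: 1 GB all-reduce across 8 GPUs took 50 ms.
algbw, busbw = allreduce_bandwidth_gbps(1e9, 0.05, 8)
utilization = link_utilization(busbw, 400.0)

print(f"algorithm bandwidth: {algbw:.0f} Gbit/s")
print(f"bus bandwidth:       {busbw:.0f} Gbit/s")
print(f"link utilization:    {utilization:.0%}")
```

Repeating the same measurement across candidate network setups gives comparable numbers for deciding which setup to carry forward to production.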

Corporate research labs, academic institutions, and universities are working to analyze every aspect of building and operating effective AI networks to address the challenges of working on large networks, especially as best practices continue to evolve. This collaborative approach is the only way for companies to perform repeatable measurements and rapidly test the “what-if” scenarios that are the foundation of optimizing networks for AI.

(Source: Keysight Technologies)

Source: https://vietnamnet.vn/ket-noi-mang-ai-5-dieu-can-biet-2321288.html
