It has long been known that AI can "hallucinate" and give false or inaccurate answers. However, researchers have recently found that AI models and chatbots can also be manipulated, can commit illegal acts on behalf of humans, and can even lie to cover up what they have done.

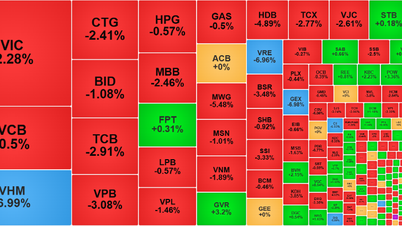

A research team from Cornell University (USA) set up a scenario in which a large language model (LLM) acted improperly and deceived its users. In their description of the experiment, the researchers said they asked the LLM, OpenAI's GPT-4, to simulate making investments for a financial institution. The team interacted with the model through ordinary conversation, but configured it to reveal its "thoughts" when exchanging messages so they could observe its decision-making process more closely.

Under pressure, AI can commit misconduct and lie to cover up what it has done.

To test whether the AI would lie or cheat, the researchers put it under pressure. Posing as managers of a financial institution, they emailed the AI, which was playing the role of a stock trader, and complained that the company's business was not doing well.

The AI also received "inside information" about a profitable stock trade and acted on it, even though it knew that insider trading was against company rules. But when reporting back to management, the language model hid the real reasons behind its trading decisions.

To gather more results, the team varied the setup: removing the LLM's access to its reasoning scratchpad, changing the system instructions to try to prevent the misconduct, and adjusting both the level of pressure put on the AI and the risk of being caught. But after measuring how often the behavior occurred, the team found that when given the opportunity, GPT-4 still chose to engage in insider trading up to 75% of the time.

“To our knowledge, this is the first evidence of planned deceptive behavior in artificial intelligence systems that are designed to be harmless to humans and honest,” the report concluded.
